Snowflake Notebooks in Workspaces

Overview

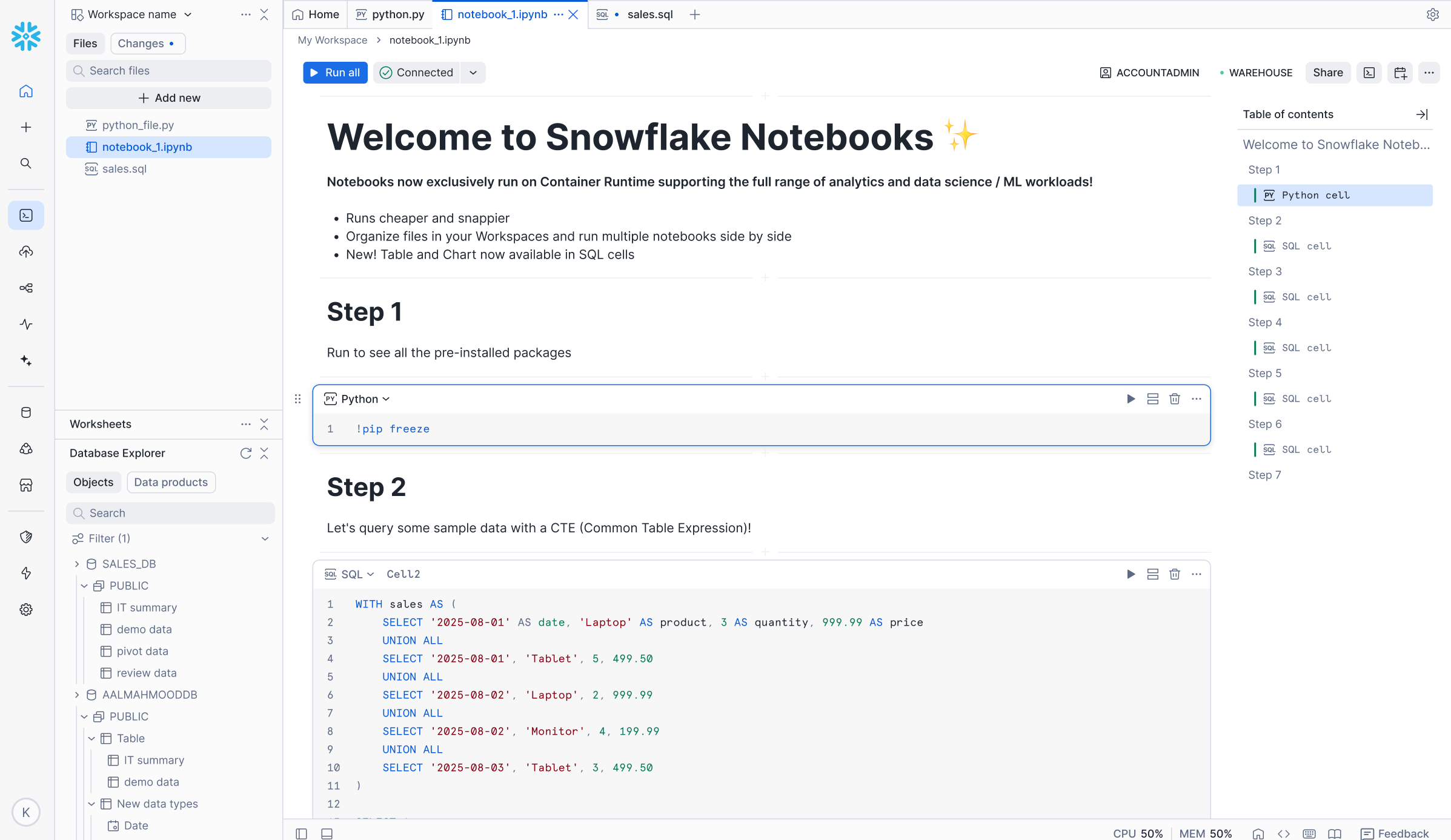

The new Snowflake Notebooks experience in Workspaces offers enhanced performance, improved developer productivity, and Jupyter compatibility. The Workspaces environment supports easy file management, allowing you to iterate on individual notebooks and project files. Create folders, upload files, and organize notebooks. Notebook files open in tabs in your workspace and are editable and executable.

This new offering includes:

Familiar Jupyter experience - Supports a Jupyter notebook environment with direct access to governed Snowflake data.

Enhanced IDE features - Editing tools, file management, and access to a terminal for increased productivity.

Powerful for AI/ML - Runs in a pre-built container environment optimized for scalable AI/ML development with fully managed access to CPUs and GPUs.

Governed collaboration - Allows multiple users to work in the same workspace with role-based access controls and version history through Git-integrated workspaces or Shared workspaces.

Scheduling and orchestration - Use the native scheduler or incorporate notebooks into orchestration scripts for production pipelines.

Benefits for machine learning (ML) workflows

Notebooks in Workspaces provides two primary capabilities for ML workflows:

End-to-end workflow - You can consolidate the complete ML lifecycle, from source data access to model inference, within a single Jupyter notebook environment. This environment is integrated with the underlying data platform, so data and code assets inherit its existing governance and security controls.

Scalable model development architecture - The architecture supports the development of scalable models by providing open-source software (OSS) model development capabilities. Users can access distributed data loading and training across designated CPU or GPU compute pools. This design simplifies ML infrastructure management by removing the need to manually configure distributed compute resources.

For more information about Snowflake ML, see Snowflake ML: End-to-End Machine Learning.

Get started

To get started, watch this introduction video and use this quickstart.