Cortex Playground¶

The Cortex Playground lets you compare text completions across the multiple large language models available in Cortex AI. You can test language model responses across prompts and model settings, and perform side-by-side comparisons of model outputs. With a few clicks, you can also connect the model to a Snowflake table to experiment directly on your data. The Cortex Playground is purpose-built to help you easily test how different language models perform for your use case before you decide which model to deploy into production.

The Cortex Playground supports every model that the COMPLETE function offers in your account’s region. For the complete list of models, see Model availability.

Required privileges¶

The Cortex Playground requires the CORTEX_USER database role, which includes the privileges to call Snowflake Cortex LLM functions. For more information, see Cortex LLM privileges.
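
If the role has not been granted yet, an administrator could grant it roughly as follows. This is a minimal sketch: `cortex_user_role` and `some_user` are placeholder names that do not come from this page, and it assumes you can use a role that is allowed to grant the database role.

```sql
-- Assumption: ACCOUNTADMIN (or another role permitted to grant this database role) is available.
USE ROLE ACCOUNTADMIN;

-- Hypothetical custom role that carries the Cortex privileges.
CREATE ROLE IF NOT EXISTS cortex_user_role;
GRANT DATABASE ROLE SNOWFLAKE.CORTEX_USER TO ROLE cortex_user_role;

-- Hypothetical user who will open the Cortex Playground.
GRANT ROLE cortex_user_role TO USER some_user;
```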

Get started with the Cortex Playground¶

The Cortex Playground is accessible from the Snowflake AI & ML Studio. You can access the studio from Snowsight as follows:

Sign in to Snowsight.

In the navigation menu, select AI & ML » AI Studio. The Cortex Playground appears among the other Studio functions.

To open the playground, select Try.

Test your prompt with a language model¶

Use the Cortex Playground to test prompts across different language models.

Select a warehouse. This warehouse is used to run the SQL command that calls the COMPLETE function.

Select a model from the dropdown menu at the top. The dropdown menu includes only the models that are available in your account’s region.

Enter your prompt in the prompt box and select Enter.

The model output appears above the prompt box. You can select View Code to see and copy the SQL command used to process your prompt.

To try a different prompt or model, choose the desired model and enter a new prompt in the prompt box, then select Enter.
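
The exact SQL that View Code displays may differ, but a single-prompt call to COMPLETE has roughly the following shape. The model name and prompt here are illustrative assumptions; substitute a model that is available in your region.

```sql
-- Sketch of a single-prompt call; runs on the currently selected warehouse.
-- 'mistral-large2' is an assumed model choice, not one prescribed by this page.
SELECT SNOWFLAKE.CORTEX.COMPLETE(
    'mistral-large2',
    'Explain in two sentences what a data warehouse is.'
) AS response;
```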

Compare model outputs¶

To compare the output of your prompts between two different models or two different settings of the same model, use the Compare feature.

Compare two models¶

Select Compare in the top right corner.

Select different models for the two panels using the dropdown menu on each side.

Open the settings panel by selecting Change settings next to Compare.

Select the Sync toggle to use the same settings for the two models.

Enter your prompt and select Enter. The output from the models you selected appears on each side.
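
Outside the playground, a similar side-by-side comparison can be approximated in a worksheet by sending one prompt to two models in a single query. This is a sketch under assumptions: both model names are illustrative and must be available in your region, and the session variable `prompt` is a name chosen for this example.

```sql
-- Store the prompt once so both models receive identical input.
SET prompt = 'List three common causes of slow SQL queries.';

-- One row, two columns: each column holds one model's response.
SELECT
    SNOWFLAKE.CORTEX.COMPLETE('mistral-large2', $prompt) AS mistral_response,
    SNOWFLAKE.CORTEX.COMPLETE('llama3.1-70b', $prompt) AS llama_response;
```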

Compare settings for one model¶

Select Compare in the top right corner.

Select the same model for the two panels.

Open the settings panel by selecting Change settings

next to Compare.

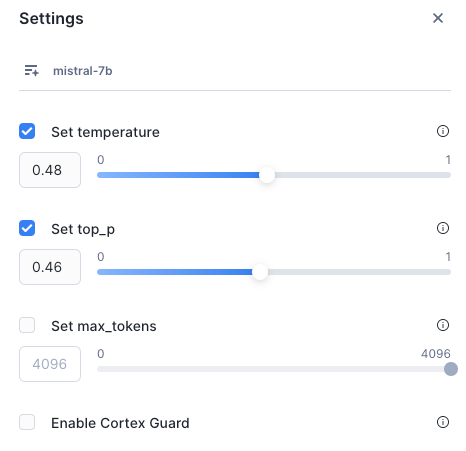

next to Compare.Choose different settings for temperature, top_p or max_tokens for each tab to compare how the language model response changes with different model settings. For more details on these parameters, see COMPLETE (SNOWFLAKE.CORTEX).

You can also check Enable Cortex Guard to implement safeguards that filter out potentially inappropriate or unsafe large language model (LLM) responses. For more details on Cortex Guard, see Cortex Guard.

Enter your prompt and select Enter. The output from the model for each set of settings appears on each side.
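
The same kind of comparison can be sketched in SQL by passing an options object to COMPLETE. The model name, prompt, and option values below are assumptions chosen for illustration. When options are supplied, the prompt is given as an array of role/content objects, and the result is a JSON-formatted string rather than plain text.

```sql
-- Same model and prompt, two different temperature settings.
SELECT
    SNOWFLAKE.CORTEX.COMPLETE(
        'mistral-large2',
        [{'role': 'user', 'content': 'Suggest a name for a coffee shop.'}],
        {'temperature': 0, 'top_p': 1, 'max_tokens': 100}
    ) AS low_temperature_response,
    SNOWFLAKE.CORTEX.COMPLETE(
        'mistral-large2',
        [{'role': 'user', 'content': 'Suggest a name for a coffee shop.'}],
        -- 'guardrails': TRUE is the SQL counterpart of Enable Cortex Guard.
        {'temperature': 0.9, 'top_p': 1, 'max_tokens': 100, 'guardrails': TRUE}
    ) AS high_temperature_response;
```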

Connect to Snowflake tables¶

You can connect the model to a Snowflake table with textual data that you want to test with text completion.

Note

You can select only one column. The Cortex Playground returns at most 100 rows.

Select the + Connect your data button in the prompt box.

Select your Snowflake data source from the dropdown menu.

Select the column with the textual data you want to test.

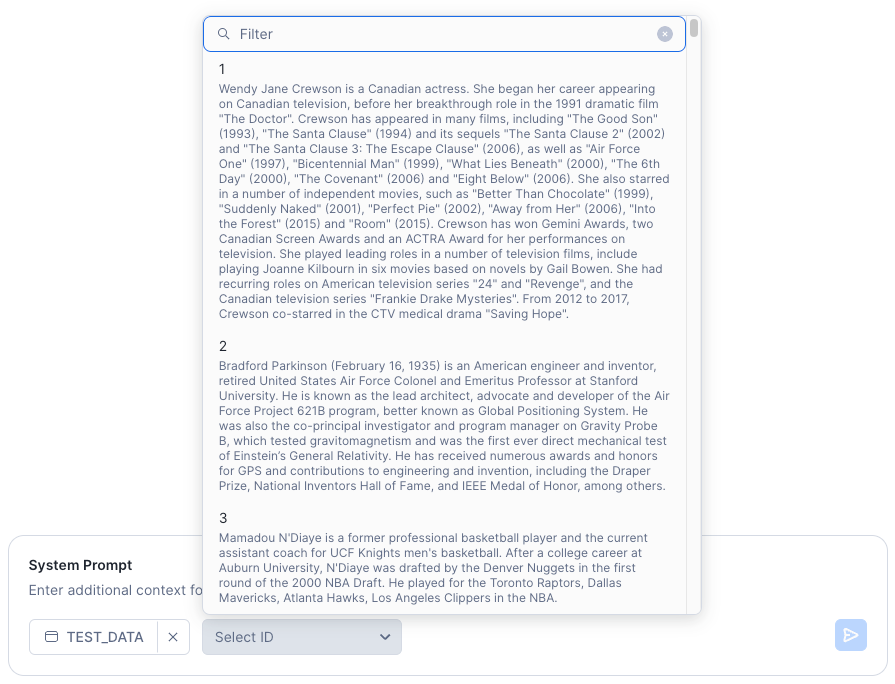

Select a column to use as a filter. You can use this column to select a record from your data source.

Select Done.

Select a record from your data source using the Select <filter column> field in the prompt box. You can select a record by scrolling or by searching for a term in the text data. To search, enter a term in the search box. The following example shows a filter column named ID. In this example, you could search for a particular ID number or enter a string to match the text data.

Enter a System Prompt and select Enter to see the model response. A system prompt provides instructions to the model on how to process the input text. For example, you might want the model to summarize the selected text or pull out keywords from it.
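
As a rough SQL equivalent of the steps above, the instruction text can be prepended to the selected column and the filter column can pick the record. The table `product_reviews`, its columns `id` and `review_text`, and the model name are hypothetical; the code that the playground itself generates may be structured differently.

```sql
-- Hypothetical table: product_reviews(id NUMBER, review_text VARCHAR).
SELECT
    id,
    SNOWFLAKE.CORTEX.COMPLETE(
        'mistral-large2',
        -- The instruction plays the part of the system prompt in this sketch.
        CONCAT('Summarize the following review in one sentence: ', review_text)
    ) AS summary
FROM product_reviews
WHERE id = 42;  -- the filter column selects a single record
```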

Controlling settings¶

You can adjust model settings to compare how the language model response changes when provided with different temperature, top_p, and max_tokens settings. To implement safeguards that filter out potentially inappropriate or unsafe responses, select Enable Cortex Guard in the settings panel.

For more information about how these settings affect language model responses, see Controlling temperature and tokens.

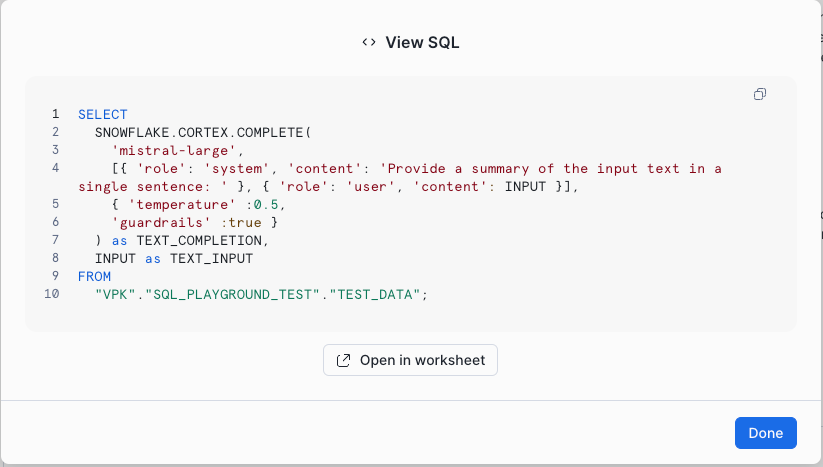

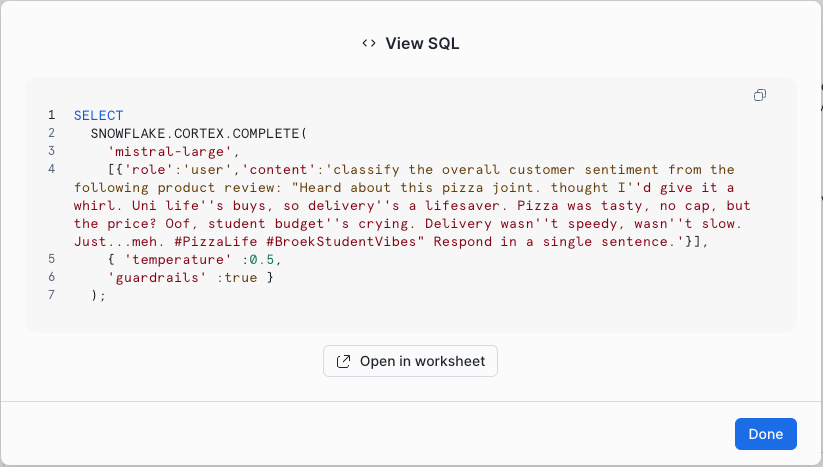

Exporting a SQL query¶

To get a SQL query that includes the settings, such as temperature, that you’ve defined in the Cortex Playground, select View Code after any model response. The displayed code can be executed from a worksheet or notebook, or automated for continuous execution using streams and tasks. You can also use this code with a dynamic table.

Note

Dynamic tables do not support incremental refresh with COMPLETE.
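
Because incremental refresh is not supported, one option is to request full refresh explicitly when wrapping the exported query in a dynamic table. The following is a sketch only: the table, column, and warehouse names and the target lag are assumptions, and every full refresh calls COMPLETE again for each row, which affects cost.

```sql
-- Hypothetical names: review_summaries, product_reviews, my_wh.
CREATE OR REPLACE DYNAMIC TABLE review_summaries
    TARGET_LAG = '1 day'
    WAREHOUSE = my_wh
    REFRESH_MODE = FULL  -- incremental refresh is not available with COMPLETE
AS
SELECT
    id,
    SNOWFLAKE.CORTEX.COMPLETE(
        'mistral-large2',
        CONCAT('Summarize the following review in one sentence: ', review_text)
    ) AS summary
FROM product_reviews;
```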

The following images show examples of the View SQL dialog.

Example 1: Exporting code when you connect the model to a Snowflake table¶

Example 2: Exporting code when you do not connect the model to a Snowflake table¶