About Snowflake Notebooks

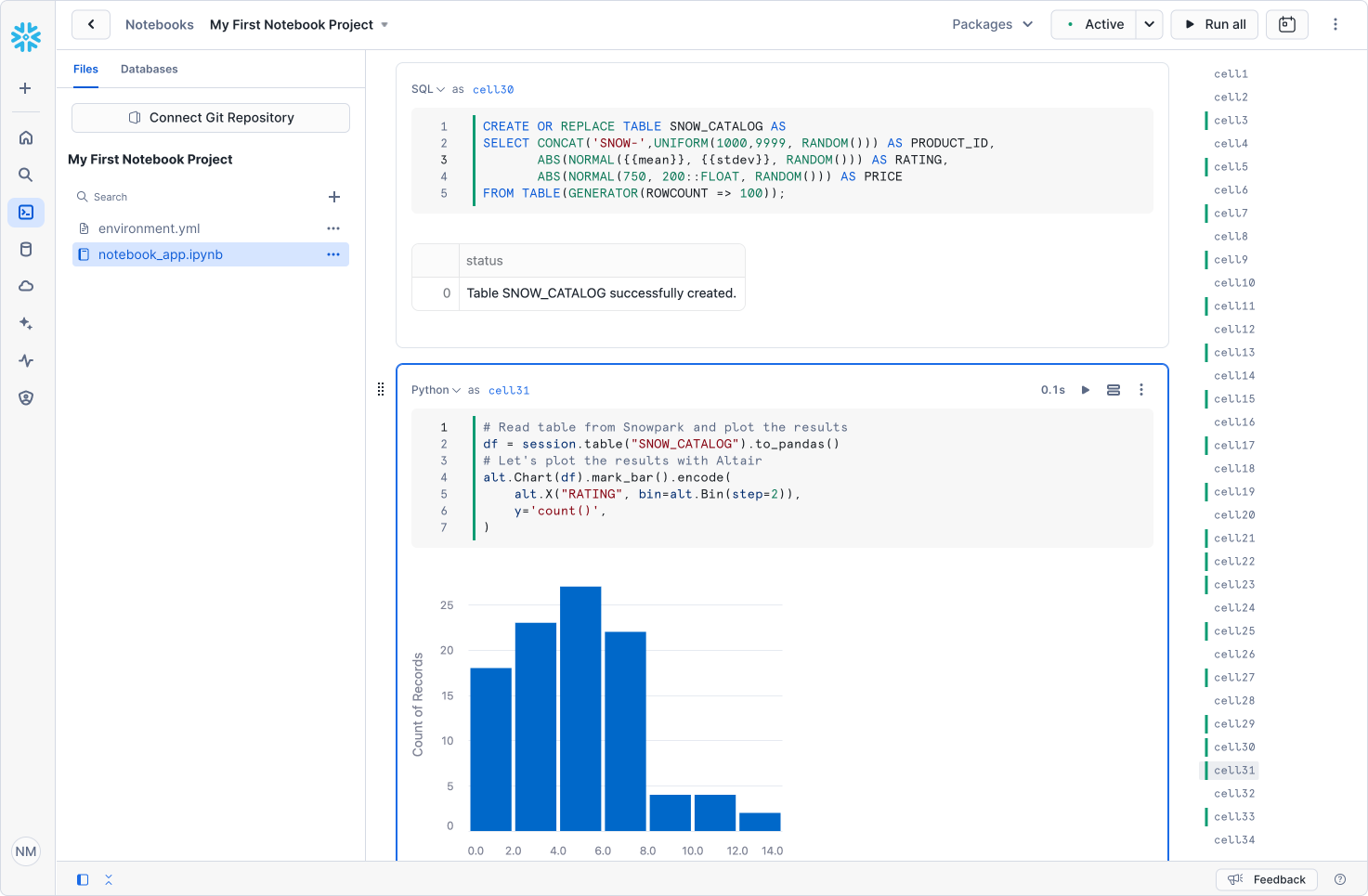

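Snowflake Notebooks is a unified development interface in Snowsight that offers an interactive, cell-based programming environment for Python, SQL, and Markdown. In Notebooks, you can use your Snowflake data to perform exploratory data analysis, develop machine learning models, and carry out other data science and data engineering workflows, all within the same interface.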

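- Explore and experiment with data already in Snowflake, or upload new data to Snowflake from local files, external cloud storage, or datasets from the Snowflake Marketplace.
- Write SQL or Python code, then develop and run it cell by cell to compare results quickly.
- Visualize your data interactively using Streamlit visualizations and other libraries such as Altair, Matplotlib, or seaborn.
- Integrate with Git to collaborate with effective version control. See Sync notebooks with a Git repository.
- Contextualize results and make notes about different results with Markdown cells and charts.
- Run your notebook on a schedule to automate pipelines. See Schedule notebook runs.
- Use the role-based access control and other data governance functionality available in Snowflake to let other users with the same role view and collaborate on the notebook.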
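As a rough illustration of running a query from a Python cell, the sketch below previews a few rows of a table through a Snowpark session. It assumes the notebook exposes its session through `get_active_session` from `snowflake.snowpark.context`; the helper name `preview_table` and the table `MY_DB.MY_SCHEMA.MY_TABLE` are hypothetical and used only for illustration.

```python
def preview_table(session, table_name, limit=5):
    """Return the first `limit` rows of `table_name`.

    `session` is expected to behave like a Snowpark Session:
    `session.sql(text)` returns a DataFrame whose `collect()` returns rows.
    """
    # Only accept plain identifiers (letters, digits, underscores, dots),
    # because the name is interpolated into the SQL text.
    if not table_name.replace("_", "").replace(".", "").isalnum():
        raise ValueError(f"unexpected table name: {table_name!r}")
    query = f"SELECT * FROM {table_name} LIMIT {int(limit)}"
    return session.sql(query).collect()


def main():
    # Assumption: this runs inside a Snowflake notebook with an active session.
    from snowflake.snowpark.context import get_active_session

    session = get_active_session()
    # Hypothetical table name; replace with a table your role can read.
    for row in preview_table(session, "MY_DB.MY_SCHEMA.MY_TABLE"):
        print(row)
```

Calling `main()` from a Python cell would print the preview rows. Keeping the query logic in `preview_table` and passing the session in makes the helper easy to rerun cell by cell with different tables or limits.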

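Note

Private notebooks are deprecated and no longer supported. The new Snowflake Notebooks experience in [Workspaces](/user-guide/ui-snowsight/workspaces) offers a similar private development environment with improved functionality. If you are interested in signing up for the preview, contact your Snowflake account team for details.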

Notebook runtimes

Snowflake Notebooks offer two distinct runtimes, each designed for specific workloads: Warehouse Runtime and Container Runtime. Notebooks utilize compute resources from either virtual warehouses (for Warehouse Runtime) or Snowpark Container Services compute pools (for Container Runtime) to execute your code. For both runtimes, SQL and Snowpark queries are always executed on the warehouse for optimized performance.

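Warehouse Runtime offers the fastest way to get started in a familiar, generally available warehouse environment. Container Runtime offers a more flexible environment that can support many types of workloads, including SQL analytics and data engineering. If Container Runtime does not include the packages you need by default, you can install additional Python packages. Container Runtime also comes in CPU and GPU versions with many popular ML packages pre-installed, which makes it well suited to ML and deep learning workloads.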
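Before installing anything extra, it can help to check which packages the current runtime already provides. The following is a minimal sketch that uses only Python's standard library; the helper name `missing_packages` and the module names in `wanted` are illustrative assumptions, not part of Snowflake Notebooks.

```python
import importlib.util


def missing_packages(names):
    """Return the top-level module names from `names` that cannot be found."""
    # find_spec returns None when a top-level module is not installed.
    return [name for name in names if importlib.util.find_spec(name) is None]


# Hypothetical list of modules a notebook might need.
wanted = ["json", "hypothetical_pkg_not_installed"]
print(missing_packages(wanted))
```

Run in a Python cell, this lists only the modules that still need to be installed. It checks top-level import names, which can differ from the distribution name used to install a package.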

The following table shows the features supported for each runtime type. Use it to help decide which runtime fits your use case.

| Supported features | Warehouse Runtime | Container Runtime |
|---|---|---|
| Compute | Kernel runs on the notebook warehouse. | Kernel runs on a compute pool node. |
| Environment | Python 3.9 | Python 3.10 (Preview) |
| Base image | Streamlit + Snowpark | Snowflake Container Runtime (CPU and GPU images come with Python libraries pre-installed). |
| Additional Python libraries | Install using Snowflake Anaconda or from a Snowflake stage. | |
| Editing support | Use Python, SQL, and Markdown cells. Reference outputs from SQL cells in Python cells and vice versa. Use visualization libraries such as Streamlit. | Same as warehouse |
| Access | Ownership is required to access and edit the notebook. | Same as warehouse |
| Supported notebook features (still in Preview) | Git integration (Preview), scheduling (Preview) | Same as warehouse |

For details on creating, running, and managing notebooks on Container Runtime, see Notebooks on Container Runtime.

Explore Notebooks

The Snowflake Notebooks toolbar provides the controls used to manage your notebook and adjust cell display settings.

제어 |

설명 |

|---|---|

|

Package selector: 노트북에서 사용할 패키지를 선택하고 설치합니다. 노트북에서 사용할 Python 패키지 가져오기 섹션을 참조하십시오. |

|

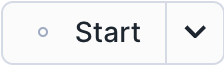

Start: 노트북 세션을 시작합니다. 세션이 시작되면 이미지가 Active 로 변경됩니다. |

|

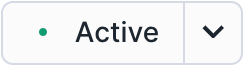

Active: 버튼 위로 마우스를 가져가면 실시간 세션 세부 정보와 집계된 리소스 소비 메트릭(메모리 사용량 및 CPU/GPU 사용량 메트릭은 Container Runtime 노트북에 대해 표시됨)을 볼 수 있습니다. 아래쪽 화살표를 선택하여 세션을 다시 시작하거나 종료하는 선택 사항에 액세스합니다. Active 를 선택하여 현재 세션을 종료합니다. |

|

Run All/Stop: 모든 셀을 실행하거나 셀 실행을 중지합니다. Snowflake Notebooks 에서 셀 실행 섹션을 참조하십시오. |

|

Scheduler: 향후 노트북을 작업으로 실행할 일정을 예약 설정합니다. 노트북 실행 예약 섹션을 참조하십시오. |

|

Vertical ellipsis menu: 노트북 설정을 사용자 지정하고, 셀 출력을 지우고, 노트북을 복제, 내보내기, 삭제합니다. |

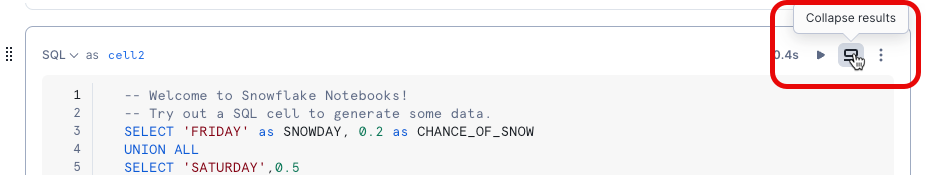

Collapse cells in a notebook

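You can collapse the code in a cell to see only the output. For example, collapse a Python cell to show only the visualizations produced by your code, or collapse a SQL cell to show only the results table.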

- To change what is visible, select Collapse results.

The drop-down provides options to collapse specific parts of the cell.