Container Runtime¶

Overview¶

The Container Runtime is a set of preconfigured, customizable environments built for machine learning on Snowpark Container Services. It covers interactive experimentation and batch ML workloads such as model training, hyperparameter tuning, batch inference, and fine-tuning. The environments include the most popular machine learning and deep learning frameworks and, used with Snowflake Notebooks, provide an end-to-end ML experience.

Execution environment¶

The Container Runtime provides an environment populated with packages and libraries that support a wide variety of ML development tasks inside Snowflake. In addition to the pre-installed packages, you can install packages from external sources such as public PyPI repositories. You can also install from internally hosted package repositories that provide a list of packages approved for use inside your organization.
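The helper below is a rough sketch of the two installation paths. It only builds the `pip` command line and does not run it. It assumes the notebook has network access to the chosen index, which is public PyPI by default. The internal index URL in the usage lines is a hypothetical placeholder for your organization's repository. `--index-url` is a standard `pip install` option.

```python
import sys


def pip_install_command(packages, index_url=None):
    """Build a `python -m pip install` command for the given requirement strings.

    packages:  requirement specifiers such as "catboost" (illustrative only).
    index_url: optional URL of an internally hosted package index; when None,
               pip uses its configured default index (normally public PyPI).
    """
    if not packages:
        raise ValueError("at least one package is required")
    # Use the notebook's own interpreter so packages land in the active environment.
    cmd = [sys.executable, "-m", "pip", "install"]
    if index_url is not None:
        # Resolve packages only from the approved internal repository.
        cmd += ["--index-url", index_url]
    return cmd + list(packages)


# Usage (hypothetical index URL); pass a result to subprocess.check_call to install.
public_cmd = pip_install_command(["catboost"])
internal_cmd = pip_install_command(
    ["catboost"], index_url="https://pypi.internal.example.com/simple"
)
```

Building the command separately from running it keeps the index choice easy to inspect before anything is installed.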

Your custom Python ML workloads and the supported training APIs run within Snowpark Container Services, on either CPU or GPU compute pools. When you use the Snowflake ML APIs, the Container Runtime distributes the processing across the available resources.

Distributed processing¶

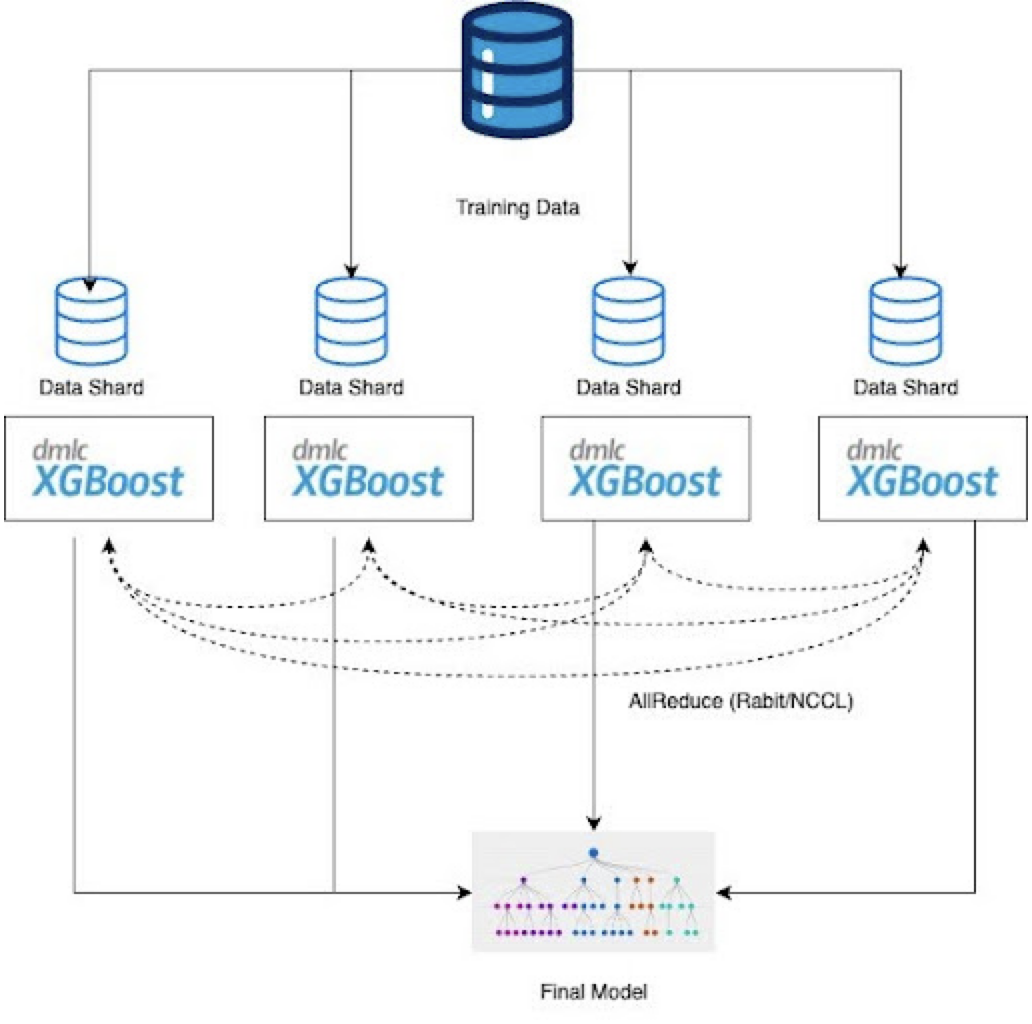

The Snowflake ML modeling and data loading APIs are built on top of Snowflake ML’s distributed processing framework, which maximizes resource utilization by making full use of the available compute. By default, this framework uses all GPUs on multi-GPU nodes. Compared to the equivalent open-source packages, this can significantly improve performance and reduce overall runtime.

Machine learning workloads, including data loading, run in a Snowflake-managed compute environment. The framework scales resources dynamically based on the requirements of the task at hand, such as training models or loading data. You can configure the resources allocated to each task, including GPUs and memory, through the provided APIs.
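The framework uses every GPU on the node by default, so it can help to check how many GPUs a notebook actually sees before you adjust resource settings. The sketch below is a generic stand-in and not a Snowflake API. It parses the output of NVIDIA's `nvidia-smi -L` command, which prints one `GPU <index>: ...` line per device. It reports zero GPUs when that command is absent, as you would expect on a CPU compute pool. Note that `os.cpu_count()` may report the host's CPUs rather than the container's limit.

```python
import os
import re
import shutil
import subprocess

# `nvidia-smi -L` prints one line per device, for example
# "GPU 0: NVIDIA A10G (UUID: GPU-...)".
_GPU_LINE = re.compile(r"^GPU \d+:")


def count_gpus_from_listing(listing: str) -> int:
    """Count the device lines in `nvidia-smi -L` output."""
    return sum(1 for line in listing.splitlines() if _GPU_LINE.match(line.strip()))


def visible_resources() -> dict:
    """Report CPU and GPU counts visible to this process (0 GPUs if nvidia-smi is unavailable)."""
    gpus = 0
    if shutil.which("nvidia-smi"):
        try:
            result = subprocess.run(
                ["nvidia-smi", "-L"], capture_output=True, text=True, timeout=10, check=False
            )
        except (OSError, subprocess.TimeoutExpired):
            result = None
        if result is not None and result.returncode == 0:
            gpus = count_gpus_from_listing(result.stdout)
    # os.cpu_count() can return None and may not reflect container CPU limits.
    return {"cpus": os.cpu_count(), "gpus": gpus}
```

Calling `print(visible_resources())` at the top of a notebook gives a quick view of what the current compute pool node exposes.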

Optimized data loading¶

The Container Runtime provides a set of data connector APIs that connect Snowflake data sources (including tables, DataFrames, and Datasets) to popular ML frameworks such as PyTorch and TensorFlow, taking full advantage of multiple cores or GPUs. Once loaded, the data can be processed using open-source packages or any of the Snowflake ML APIs, including the distributed versions described below. These APIs are found in the snowflake.ml.data namespace.

The snowflake.ml.data.data_connector.DataConnector class connects Snowpark DataFrames or Snowflake ML Datasets to TensorFlow or PyTorch datasets, or to pandas DataFrames. Instantiate a connector using one of the following class methods:

DataConnector.from_dataframe: Accepts a Snowpark DataFrame.

DataConnector.from_dataset: Accepts a Snowflake ML Dataset, specified by name and version.

DataConnector.from_sources: Accepts a list of sources, each of which can be a DataFrame or a Dataset.

After you instantiate the connector (for example, as data_connector), call one of the following methods to produce the desired kind of output:

data_connector.to_tf_dataset: Returns a TensorFlow Dataset suitable for use with TensorFlow.

data_connector.to_torch_dataset: Returns a PyTorch Dataset suitable for use with PyTorch.

data_connector.to_pandas: Returns a pandas DataFrame.

For more information on these APIs, see the Snowflake ML API reference.

Building with open source¶

The foundational CPU and GPU images come pre-populated with popular ML packages, and you can install additional libraries using pip. Together, these let you use familiar open-source frameworks inside Snowflake Notebooks without moving data out of Snowflake. To scale processing, use Snowflake’s distributed APIs for data loading, training, and hyperparameter optimization. These keep the familiar APIs of popular OSS packages, with small interface changes to allow for scaling configurations.

The following code uses the DataConnector API to load a Snowflake table into pandas, then trains an open-source XGBoost classifier in memory:

```python
from snowflake.snowpark.context import get_active_session
from snowflake.ml.data.data_connector import DataConnector
import xgboost as xgb
from sklearn.model_selection import train_test_split

session = get_active_session()

# Use the DataConnector API to pull in large data efficiently
df = session.table("my_dataset")
pandas_df = DataConnector.from_dataframe(df).to_pandas()

# Build with open source. Column names are placeholders; Snowflake returns
# unquoted identifiers in uppercase (for example, FEATURE1).
X = pandas_df[['feature1', 'feature2']]
y = pandas_df['label']

# Split data into test and train in memory
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.15, random_state=34)

# Train in memory
model = xgb.XGBClassifier()
model.fit(X_train, y_train)

# Predict
y_pred = model.predict(X_test)
```

The CPU container runtime has different packages than the GPU container runtime. The following sections list the packages available within each container runtime.
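Notebooks that move between the two runtimes can be tripped up by these differences. For example, pandas is 2.3.2 in the CPU runtime but 2.2.3 in the GPU runtime. The sketch below compares two `{package: version}` mappings. The excerpts are copied from the package tables in this section; in practice you would load the full lists yourself.

```python
def diff_runtimes(cpu: dict, gpu: dict) -> dict:
    """Compare two {package: version} mappings and group the differences."""
    shared = cpu.keys() & gpu.keys()
    return {
        "cpu_only": sorted(cpu.keys() - gpu.keys()),
        "gpu_only": sorted(gpu.keys() - cpu.keys()),
        # Packages present in both runtimes but pinned to different versions.
        "version_mismatch": {
            name: (cpu[name], gpu[name]) for name in sorted(shared) if cpu[name] != gpu[name]
        },
    }


# Small excerpts of the CPU and GPU package tables in this section.
CPU_EXCERPT = {"pandas": "2.3.2", "pyarrow": "21.0.0", "transformers": "4.55.4", "xgboost": "2.1.4"}
GPU_EXCERPT = {
    "pandas": "2.2.3",
    "pyarrow": "19.0.1",
    "transformers": "4.51.3",
    "xgboost": "2.1.4",
    "vllm": "0.8.5.post1",
}

report = diff_runtimes(CPU_EXCERPT, GPU_EXCERPT)
```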

Snowflake Runtime packages¶

Snowflake Runtime CPU packages¶

The following are the packages available for the Snowflake ML Runtime CPU version.

| Package | Version |
|---|---|
| absl-py | 1.4.0 |
| aiobotocore | 2.23.2 |
| aiohappyeyeballs | 2.6.1 |
| aiohttp | 3.12.15 |
| aiohttp-cors | 0.8.1 |
| aioitertools | 0.12.0 |
| aiosignal | 1.4.0 |
| altair | 5.5.0 |
| annotated-types | 0.7.0 |
| anyio | 4.10.0 |
| appdirs | 1.4.4 |
| arviz | 0.22.0 |
| asn1crypto | 1.5.1 |
| asttokens | 3.0.0 |
| async-timeout | 5.0.1 |
| attrs | 25.3.0 |
| bayesian-optimization | 1.5.1 |
| blinker | 1.9.0 |
| boto3 | 1.39.8 |
| botocore | 1.39.8 |
| cachetools | 5.5.2 |
| CausalPy | 0.5.0 |
| certifi | 2025.8.3 |
| cffi | 1.17.1 |
| charset-normalizer | 3.4.3 |
| click | 8.2.1 |
| clikit | 0.6.2 |
| cloudpickle | 3.0.0 |
| cmdstanpy | 1.2.5 |
| colorama | 0.4.6 |
| colorful | 0.5.7 |
| comm | 0.2.3 |
| cons | 0.4.7 |
| contourpy | 1.3.2 |
| crashtest | 0.3.1 |
| cryptography | 43.0.3 |
| cycler | 0.12.1 |
| datasets | 4.0.0 |
| debugpy | 1.8.16 |
| decorator | 5.2.1 |
| Deprecated | 1.2.18 |
| dill | 0.3.8 |
| distlib | 0.4.0 |
| etuples | 0.3.10 |
| evaluate | 0.4.5 |
| exceptiongroup | 1.3.0 |
| executing | 2.2.0 |
| fastapi | 0.116.1 |
| filelock | 3.19.1 |
| FLAML | 2.3.6 |
| Flask | 3.1.2 |
| fonttools | 4.59.2 |
| frozenlist | 1.7.0 |
| fsspec | 2025.3.0 |
| gitdb | 4.0.12 |
| GitPython | 3.1.45 |
| google-api-core | 2.25.1 |
| google-auth | 2.40.3 |
| googleapis-common-protos | 1.70.0 |
| graphviz | 0.21 |
| grpcio | 1.74.0 |
| grpcio-status | 1.62.3 |
| grpcio-tools | 1.62.3 |
| gunicorn | 23.0.0 |
| h11 | 0.16.0 |
| h5netcdf | 1.6.4 |
| h5py | 3.14.0 |
| hf-xet | 1.1.9 |
| holidays | 0.79 |
| httpcore | 1.0.9 |
| httpstan | 4.13.0 |
| httpx | 0.28.1 |
| huggingface-hub | 0.34.4 |
| hypothesis | 6.138.7 |
| idna | 3.10 |
| importlib_metadata | 8.0.0 |
| importlib_resources | 6.5.2 |
| ipykernel | 6.30.1 |
| ipython | 8.37.0 |
| itsdangerous | 2.2.0 |
| JayDeBeApi | 1.2.3 |
| jedi | 0.19.2 |
| Jinja2 | 3.1.6 |
| jmespath | 1.0.1 |
| joblib | 1.5.2 |
| jpype1 | 1.6.0 |
| jsonschema | 4.25.1 |
| jsonschema-specifications | 2025.4.1 |
| jupyter_client | 8.6.3 |
| jupyter_core | 5.8.1 |
| kiwisolver | 1.4.9 |
| lightgbm | 4.5.0 |
| lightgbm-ray | 0.1.9 |
| llvmlite | 0.44.0 |
| logical-unification | 0.4.6 |
| markdown-it-py | 4.0.0 |
| MarkupSafe | 3.0.2 |
| marshmallow | 3.26.1 |
| matplotlib | 3.10.5 |
| matplotlib-inline | 0.1.7 |
| mdurl | 0.1.2 |
| miniKanren | 1.0.5 |
| mlruntimes_service | 1.8.0 |
| modin | 0.35.0 |
| mpmath | 1.3.0 |
| msgpack | 1.1.1 |
| multidict | 6.6.4 |
| multipledispatch | 1.0.0 |
| multiprocess | 0.70.16 |
| narwhals | 2.2.0 |
| nest-asyncio | 1.6.0 |
| networkx | 3.4.2 |
| nltk | 3.9.1 |
| numba | 0.61.2 |
| numpy | 1.26.4 |
| nvidia-nccl-cu12 | 2.27.7 |
| opencensus | 0.11.4 |
| opencensus-context | 0.1.3 |
| opentelemetry-api | 1.26.0 |
| opentelemetry-exporter-otlp | 1.26.0 |
| opentelemetry-exporter-otlp-proto-common | 1.26.0 |
| opentelemetry-exporter-otlp-proto-grpc | 1.26.0 |
| opentelemetry-exporter-otlp-proto-http | 1.26.0 |
| opentelemetry-exporter-prometheus | 0.47b0 |
| opentelemetry-proto | 1.26.0 |
| opentelemetry-sdk | 1.26.0 |
| opentelemetry-semantic-conventions | 0.47b0 |
| packaging | 24.2 |
| pandas | 2.3.2 |
| parso | 0.8.5 |
| pastel | 0.2.1 |
| patsy | 1.0.1 |
| pexpect | 4.9.0 |
| pillow | 10.4.0 |
| platformdirs | 4.4.0 |
| plotly | 6.3.0 |
| prometheus_client | 0.22.1 |
| prompt_toolkit | 3.0.52 |
| propcache | 0.3.2 |
| prophet | 1.1.7 |
| proto-plus | 1.26.1 |
| protobuf | 4.25.8 |
| psutil | 7.0.0 |
| ptyprocess | 0.7.0 |
| pure_eval | 0.2.3 |
| py-spy | 0.4.1 |
| py4j | 0.10.9.7 |
| pyarrow | 21.0.0 |
| pyasn1 | 0.6.1 |
| pyasn1_modules | 0.4.2 |
| pycparser | 2.22 |
| pydantic | 2.11.7 |
| pydantic-settings | 2.10.1 |
| pydantic_core | 2.33.2 |
| pydeck | 0.9.1 |
| Pygments | 2.19.2 |
| PyJWT | 2.10.1 |
| pylev | 1.4.0 |
| pymc | 5.25.1 |
| pyOpenSSL | 25.1.0 |
| pyparsing | 3.2.3 |
| pysimdjson | 6.0.2 |
| pystan | 3.10.0 |
| pytensor | 2.31.7 |
| python-dateutil | 2.9.0.post0 |
| python-dotenv | 1.1.1 |
| pytimeparse | 1.1.8 |
| pytz | 2025.2 |
| PyYAML | 6.0.2 |
| pyzmq | 27.0.2 |
| ray | 2.47.1 |
| referencing | 0.36.2 |
| regex | 2025.7.34 |
| requests | 2.32.5 |
| retrying | 1.4.2 |
| rich | 13.9.4 |
| rpds-py | 0.27.1 |
| rsa | 4.9.1 |
| s3fs | 2025.3.0 |
| s3transfer | 0.13.1 |
| safetensors | 0.6.2 |
| scikit-learn | 1.5.2 |
| scipy | 1.15.3 |
| seaborn | 0.13.2 |
| shap | 0.48.0 |
| six | 1.17.0 |
| slicer | 0.0.8 |
| smart_open | 7.3.0.post1 |
| smmap | 5.0.2 |
| sniffio | 1.3.1 |
| snowbooks | 1.76.7rc1 |
| snowflake | 1.7.0 |
| snowflake-connector-python | 3.17.2 |
| snowflake-ml-python | 1.11.0 |
| snowflake-snowpark-python | 1.37.0 |
| snowflake-telemetry-python | 0.7.1 |
| snowflake._legacy | 1.0.1 |
| snowflake.core | 1.7.0 |
| snowpark-connect | 0.20.3 |
| sortedcontainers | 2.4.0 |
| sqlglot | 27.9.0 |
| sqlparse | 0.5.3 |
| stack-data | 0.6.3 |
| stanio | 0.5.1 |
| starlette | 0.47.3 |
| statsmodels | 0.14.5 |
| streamlit | 1.39.1 |
| sympy | 1.13.1 |
| tenacity | 9.1.2 |
| threadpoolctl | 3.6.0 |
| tokenizers | 0.21.4 |
| toml | 0.10.2 |
| tomlkit | 0.13.3 |
| toolz | 1.0.0 |
| torch | 2.6.0+cpu |
| torchvision | 0.21.0+cpu |
| tornado | 6.5.2 |
| tqdm | 4.67.1 |
| traitlets | 5.14.3 |
| transformers | 4.55.4 |
| typing-inspection | 0.4.1 |
| typing_extensions | 4.15.0 |
| tzdata | 2025.2 |
| tzlocal | 5.3.1 |
| urllib3 | 2.5.0 |
| uvicorn | 0.35.0 |
| virtualenv | 20.34.0 |
| watchdog | 5.0.3 |
| wcwidth | 0.2.13 |
| webargs | 8.7.0 |
| Werkzeug | 3.1.3 |
| wrapt | 1.17.3 |
| xarray | 2025.6.1 |
| xarray-einstats | 0.8.0 |
| xgboost | 2.1.4 |
| xgboost-ray | 0.1.19 |
| xxhash | 3.5.0 |
| yarl | 1.20.1 |
| zipp | 3.23.0 |
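If a notebook depends on specific versions from this list, a quick check at the top of the notebook can flag drift, for example after a `pip install` has upgraded a dependency. This sketch uses the standard-library `importlib.metadata.version`. The pins you pass are whatever your notebook relies on. The lookup function is injectable so the check can be exercised without the packages installed.

```python
from importlib.metadata import PackageNotFoundError, version


def check_pins(expected: dict, lookup=version) -> dict:
    """Return {package: (expected, installed_or_None)} for every pin that does not match."""
    problems = {}
    for name, wanted in expected.items():
        try:
            installed = lookup(name)
        except PackageNotFoundError:
            installed = None  # the package is not installed in this environment
        if installed != wanted:
            problems[name] = (wanted, installed)
    return problems


# Usage in the CPU runtime: an empty dict means every pin matches the table above.
print(check_pins({"xgboost": "2.1.4", "scikit-learn": "1.5.2", "pandas": "2.3.2"}))
```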

Snowflake ML Runtime GPU packages¶

The following are the packages available for the Snowflake ML Runtime GPU version.

| Package | Version |
|---|---|
| absl-py | 1.4.0 |
| accelerate | 1.10.1 |
| aiobotocore | 2.23.2 |
| aiohappyeyeballs | 2.6.1 |
| aiohttp | 3.12.15 |
| aiohttp-cors | 0.8.1 |
| aioitertools | 0.12.0 |
| aiosignal | 1.4.0 |
| airportsdata | 20250811 |
| altair | 5.5.0 |
| annotated-types | 0.7.0 |
| anyio | 4.10.0 |
| appdirs | 1.4.4 |
| arviz | 0.22.0 |
| asn1crypto | 1.5.1 |
| astor | 0.8.1 |
| asttokens | 3.0.0 |
| async-timeout | 5.0.1 |
| attrs | 25.3.0 |
| bayesian-optimization | 1.5.1 |
| blake3 | 1.0.5 |
| blinker | 1.9.0 |
| boto3 | 1.39.8 |
| botocore | 1.39.8 |
| cachetools | 5.5.2 |
| CausalPy | 0.5.0 |
| certifi | 2025.8.3 |
| cffi | 1.17.1 |
| charset-normalizer | 3.4.3 |
| click | 8.2.1 |
| clikit | 0.6.2 |
| cloudpickle | 3.0.0 |
| cmdstanpy | 1.2.5 |
| colorama | 0.4.6 |
| colorful | 0.5.7 |
| comm | 0.2.3 |
| compressed-tensors | 0.9.3 |
| cons | 0.4.7 |
| contourpy | 1.3.2 |
| crashtest | 0.3.1 |
| cryptography | 43.0.3 |
| cuda-bindings | 12.9.2 |
| cuda-pathfinder | 1.1.0 |
| cuda-python | 12.9.2 |
| cudf-cu12 | 25.6.0 |
| cuml-cu12 | 25.6.0 |
| cupy-cuda12x | 13.6.0 |
| cuvs-cu12 | 25.6.1 |
| cycler | 0.12.1 |
| dask | 2025.5.0 |
| dask-cuda | 25.6.0 |
| dask-cudf-cu12 | 25.6.0 |
| datasets | 4.0.0 |
| debugpy | 1.8.16 |
| decorator | 5.2.1 |
| Deprecated | 1.2.18 |
| depyf | 0.18.0 |
| dill | 0.3.8 |
| diskcache | 5.6.3 |
| distlib | 0.4.0 |
| distributed | 2025.5.0 |
| distributed-ucxx-cu12 | 0.44.0 |
| distro | 1.9.0 |
| dnspython | 2.7.0 |
| einops | 0.8.1 |
| email-validator | 2.3.0 |
| etuples | 0.3.10 |
| evaluate | 0.4.5 |
| exceptiongroup | 1.3.0 |
| executing | 2.2.0 |
| fastapi | 0.116.1 |
| fastapi-cli | 0.0.8 |
| fastapi-cloud-cli | 0.1.5 |
| fastrlock | 0.8.3 |
| filelock | 3.19.1 |
| FLAML | 2.3.6 |
| Flask | 3.1.2 |
| fonttools | 4.59.2 |
| frozenlist | 1.7.0 |
| fsspec | 2025.3.0 |
| gguf | 0.17.1 |
| gitdb | 4.0.12 |
| GitPython | 3.1.45 |
| google-api-core | 2.25.1 |
| google-auth | 2.40.3 |
| googleapis-common-protos | 1.70.0 |
| graphviz | 0.21 |
| grpcio | 1.74.0 |
| grpcio-status | 1.62.3 |
| grpcio-tools | 1.62.3 |
| gunicorn | 23.0.0 |
| h11 | 0.16.0 |
| h5netcdf | 1.6.4 |
| h5py | 3.14.0 |
| hf-xet | 1.1.9 |
| holidays | 0.79 |
| httpcore | 1.0.9 |
| httpstan | 4.13.0 |
| httptools | 0.6.4 |
| httpx | 0.28.1 |
| huggingface-hub | 0.34.4 |
| hypothesis | 6.138.7 |
| idna | 3.10 |
| importlib_metadata | 8.0.0 |
| importlib_resources | 6.5.2 |
| interegular | 0.3.3 |
| ipykernel | 6.30.1 |
| ipython | 8.37.0 |
| itsdangerous | 2.2.0 |
| JayDeBeApi | 1.2.3 |
| jedi | 0.19.2 |
| Jinja2 | 3.1.6 |
| jiter | 0.10.0 |
| jmespath | 1.0.1 |
| joblib | 1.5.2 |
| jpype1 | 1.6.0 |
| jsonschema | 4.25.1 |
| jsonschema-specifications | 2025.4.1 |
| jupyter_client | 8.6.3 |
| jupyter_core | 5.8.1 |
| kiwisolver | 1.4.9 |
| lark | 1.2.2 |
| libcudf-cu12 | 25.6.0 |
| libcuml-cu12 | 25.6.0 |
| libcuvs-cu12 | 25.6.1 |
| libkvikio-cu12 | 25.6.0 |
| libraft-cu12 | 25.6.0 |
| librmm-cu12 | 25.6.0 |
| libucx-cu12 | 1.18.1 |
| libucxx-cu12 | 0.44.0 |
| lightgbm | 4.5.0 |
| lightgbm-ray | 0.1.9 |
| llguidance | 0.7.30 |
| llvmlite | 0.44.0 |
| lm-format-enforcer | 0.10.12 |
| locket | 1.0.0 |
| logical-unification | 0.4.6 |
| markdown-it-py | 4.0.0 |
| MarkupSafe | 3.0.2 |
| marshmallow | 3.26.1 |
| matplotlib | 3.10.5 |
| matplotlib-inline | 0.1.7 |
| mdurl | 0.1.2 |
| miniKanren | 1.0.5 |
| mistral_common | 1.8.4 |
| mlruntimes_service | 1.8.0 |
| modin | 0.35.0 |
| mpmath | 1.3.0 |
| msgpack | 1.1.1 |
| msgspec | 0.19.0 |
| multidict | 6.6.4 |
| multipledispatch | 1.0.0 |
| multiprocess | 0.70.16 |
| narwhals | 2.2.0 |
| nest-asyncio | 1.6.0 |
| networkx | 3.4.2 |
| ninja | 1.13.0 |
| nltk | 3.9.1 |
| numba | 0.61.2 |
| numba-cuda | 0.11.0 |
| numpy | 1.26.4 |
| nvidia-cublas-cu12 | 12.6.4.1 |
| nvidia-cuda-cupti-cu12 | 12.6.80 |
| nvidia-cuda-nvcc-cu12 | 12.9.86 |
| nvidia-cuda-nvrtc-cu12 | 12.6.77 |
| nvidia-cuda-runtime-cu12 | 12.6.77 |
| nvidia-cudnn-cu12 | 9.5.1.17 |
| nvidia-cufft-cu12 | 11.3.0.4 |
| nvidia-curand-cu12 | 10.3.7.77 |
| nvidia-cusolver-cu12 | 11.7.1.2 |
| nvidia-cusparse-cu12 | 12.5.4.2 |
| nvidia-cusparselt-cu12 | 0.6.3 |
| nvidia-ml-py | 12.575.51 |
| nvidia-nccl-cu12 | 2.21.5 |
| nvidia-nvjitlink-cu12 | 12.6.85 |
| nvidia-nvtx-cu12 | 12.6.77 |
| nvtx | 0.2.13 |
| openai | 1.102.0 |
| opencensus | 0.11.4 |
| opencensus-context | 0.1.3 |
| opencv-python-headless | 4.11.0.86 |
| opentelemetry-api | 1.26.0 |
| opentelemetry-exporter-otlp | 1.26.0 |
| opentelemetry-exporter-otlp-proto-common | 1.26.0 |
| opentelemetry-exporter-otlp-proto-grpc | 1.26.0 |
| opentelemetry-exporter-otlp-proto-http | 1.26.0 |
| opentelemetry-exporter-prometheus | 0.47b0 |
| opentelemetry-proto | 1.26.0 |
| opentelemetry-sdk | 1.26.0 |
| opentelemetry-semantic-conventions | 0.47b0 |
| opentelemetry-semantic-conventions-ai | 0.4.13 |
| outlines | 0.1.11 |
| outlines_core | 0.1.26 |
| packaging | 24.2 |
| pandas | 2.2.3 |
| parso | 0.8.5 |
| partd | 1.4.2 |
| partial-json-parser | 0.2.1.1.post6 |
| pastel | 0.2.1 |
| patsy | 1.0.1 |
| peft | 0.17.1 |
| pexpect | 4.9.0 |
| pillow | 10.4.0 |
| platformdirs | 4.4.0 |
| plotly | 6.3.0 |
| prometheus-fastapi-instrumentator | 7.1.0 |
| prometheus_client | 0.22.1 |
| prompt_toolkit | 3.0.52 |
| propcache | 0.3.2 |
| prophet | 1.1.7 |
| proto-plus | 1.26.1 |
| protobuf | 4.25.8 |
| psutil | 7.0.0 |
| ptyprocess | 0.7.0 |
| pure_eval | 0.2.3 |
| py-cpuinfo | 9.0.0 |
| py-spy | 0.4.1 |
| py4j | 0.10.9.7 |
| pyarrow | 19.0.1 |
| pyasn1 | 0.6.1 |
| pyasn1_modules | 0.4.2 |
| pycountry | 24.6.1 |
| pycparser | 2.22 |
| pydantic | 2.11.7 |
| pydantic-extra-types | 2.10.5 |
| pydantic-settings | 2.10.1 |
| pydantic_core | 2.33.2 |
| pydeck | 0.9.1 |
| Pygments | 2.19.2 |
| PyJWT | 2.10.1 |
| pylev | 1.4.0 |
| pylibcudf-cu12 | 25.6.0 |
| pylibraft-cu12 | 25.6.0 |
| pymc | 5.25.1 |
| pynvjitlink-cu12 | 0.7.0 |
| pynvml | 12.0.0 |
| pyOpenSSL | 25.1.0 |
| pyparsing | 3.2.3 |
| pysimdjson | 6.0.2 |
| pystan | 3.10.0 |
| pytensor | 2.31.7 |
| python-dateutil | 2.9.0.post0 |
| python-dotenv | 1.1.1 |
| python-json-logger | 3.3.0 |
| python-multipart | 0.0.20 |
| pytimeparse | 1.1.8 |
| pytz | 2025.2 |
| PyYAML | 6.0.2 |
| pyzmq | 27.0.2 |
| raft-dask-cu12 | 25.6.0 |
| rapids-dask-dependency | 25.6.0 |
| rapids-logger | 0.1.1 |
| ray | 2.47.1 |
| referencing | 0.36.2 |
| regex | 2025.7.34 |
| requests | 2.32.5 |
| retrying | 1.4.2 |
| rich | 13.9.4 |
| rich-toolkit | 0.15.0 |
| rignore | 0.6.4 |
| rmm-cu12 | 25.6.0 |
| rpds-py | 0.27.1 |
| rsa | 4.9.1 |
| s3fs | 2025.3.0 |
| s3transfer | 0.13.1 |
| safetensors | 0.6.2 |
| scikit-learn | 1.5.2 |
| scipy | 1.15.3 |
| seaborn | 0.13.2 |
| sentencepiece | 0.2.1 |
| sentry-sdk | 2.35.1 |
| shap | 0.48.0 |
| shellingham | 1.5.4 |
| six | 1.17.0 |
| slicer | 0.0.8 |
| smart_open | 7.3.0.post1 |
| smmap | 5.0.2 |
| sniffio | 1.3.1 |
| snowbooks | 1.76.7rc1 |
| snowflake | 1.7.0 |
| snowflake-connector-python | 3.17.2 |
| snowflake-ml-python | 1.11.0 |
| snowflake-snowpark-python | 1.37.0 |
| snowflake-telemetry-python | 0.7.1 |
| snowflake._legacy | 1.0.1 |
| snowflake.core | 1.7.0 |
| snowpark-connect | 0.20.3 |
| sortedcontainers | 2.4.0 |
| sqlglot | 27.9.0 |
| sqlparse | 0.5.3 |
| stack-data | 0.6.3 |
| stanio | 0.5.1 |
| starlette | 0.47.3 |
| statsmodels | 0.14.5 |
| streamlit | 1.39.1 |
| sympy | 1.13.1 |
| tblib | 3.1.0 |
| tenacity | 9.1.2 |
| threadpoolctl | 3.6.0 |
| tiktoken | 0.11.0 |
| tokenizers | 0.21.4 |
| toml | 0.10.2 |
| tomlkit | 0.13.3 |
| toolz | 1.0.0 |
| torch | 2.6.0+cu126 |
| torchaudio | 2.6.0+cu126 |
| torchvision | 0.21.0+cu126 |
| tornado | 6.5.2 |
| tqdm | 4.67.1 |
| traitlets | 5.14.3 |
| transformers | 4.51.3 |
| treelite | 4.4.1 |
| triton | 3.2.0 |
| typer | 0.16.1 |
| typing-inspection | 0.4.1 |
| typing_extensions | 4.15.0 |
| tzdata | 2025.2 |
| tzlocal | 5.3.1 |
| ucx-py-cu12 | 0.44.0 |
| ucxx-cu12 | 0.44.0 |
| urllib3 | 2.5.0 |
| uvicorn | 0.35.0 |
| uvloop | 0.21.0 |
| virtualenv | 20.34.0 |
| vllm | 0.8.5.post1 |
| watchdog | 5.0.3 |
| watchfiles | 1.1.0 |
| wcwidth | 0.2.13 |
| webargs | 8.7.0 |
| websockets | 15.0.1 |
| Werkzeug | 3.1.3 |
| wrapt | 1.17.3 |
| xarray | 2025.6.1 |
| xarray-einstats | 0.8.0 |
| xformers | 0.0.29.post2 |
| xgboost | 2.1.4 |
| xgboost-ray | 0.1.19 |
| xgrammar | 0.1.18 |
| xxhash | 3.5.0 |
| yarl | 1.20.1 |
| zict | 3.0.0 |
| zipp | 3.23.0 |
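The `torch` entries in the two tables carry different local version labels: `2.6.0+cpu` in the CPU runtime and `2.6.0+cu126` in the GPU runtime. The sketch below uses that label to tell which runtime a notebook is running in. It assumes the pre-installed `torch` has not been replaced by a different build. Any other label is reported as `"unknown"` rather than guessed.

```python
from importlib.metadata import PackageNotFoundError, version


def runtime_flavor(torch_version: str) -> str:
    """Classify a torch version string by its local label: 'cpu', 'gpu', or 'unknown'."""
    _, _, local = torch_version.partition("+")
    if local == "cpu":
        return "cpu"
    if local.startswith("cu"):
        return "gpu"  # for example, cu126 means the wheel was built against CUDA 12.6
    return "unknown"


def current_runtime_flavor() -> str:
    """Classify the installed torch build, or return 'unknown' if torch is missing."""
    try:
        return runtime_flavor(version("torch"))
    except PackageNotFoundError:
        return "unknown"
```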

Optimized training¶

Container Runtime offers a set of distributed training APIs, including distributed versions of LightGBM, PyTorch, and XGBoost, that take full advantage of the available resources in the container environment. These are found in the snowflake.ml.modeling.distributors namespace. The APIs of the distributed classes are similar to those of the standard versions.

For more information on these APIs, see the API reference.

XGBoost¶

The primary XGBoost class is snowflake.ml.modeling.distributors.xgboost.XGBEstimator. Related classes include:

snowflake.ml.modeling.distributors.xgboost.XGBScalingConfig

For an example of working with this API, see the XGBoost on GPU example notebook in the Snowflake Container Runtime GitHub repository.

LightGBM¶

The primary LightGBM class is snowflake.ml.modeling.distributors.lightgbm.LightGBMEstimator. Related classes include:

snowflake.ml.modeling.distributors.lightgbm.LightGBMScalingConfig

For an example of working with this API, see the LightGBM on GPU example notebook in the Snowflake Container Runtime GitHub repository.

PyTorch¶

The primary PyTorch class is snowflake.ml.modeling.distributors.pytorch.PyTorchDistributor. Related classes and functions include:

snowflake.ml.modeling.distributors.pytorch.WorkerResourceConfig

snowflake.ml.modeling.distributors.pytorch.PyTorchScalingConfig

snowflake.ml.modeling.distributors.pytorch.Context

snowflake.ml.modeling.distributors.pytorch.get_context

For an example of working with this API, see the PyTorch on GPU example notebook in the Snowflake Container Runtime GitHub repository.

Next steps¶

To try Container Runtime in a notebook, see Notebooks on Container Runtime.