Model Serving in Snowpark Container Services¶

Note

This feature is available in AWS, Azure and Google Cloud commercial regions. It isn’t available in government or commercial government regions.

Note

The ability to run models in Snowpark Container Services (SPCS) described in this topic is available in snowflake-ml-python version 1.8.0 and later.

The Snowflake Model Registry allows you to run models either in a warehouse (the default), or in a Snowpark Container Services (SPCS) compute pool through Model Serving. Running models in a warehouse imposes a few limitations on the size and kinds of models you can use (specifically, small-to-medium size CPU-only models whose dependencies can be satisfied by packages available in the Snowflake conda channel).

Running models on Snowpark Container Services (SPCS) eases these restrictions, or eliminates them entirely. You can use any packages you want, including those from the Python Package Index (PyPI) or other sources. Large models can be run on distributed clusters of GPUs. And you don’t need to know anything about container technologies, such as Docker or Kubernetes. Snowflake Model Serving takes care of all the details.

Key concepts¶

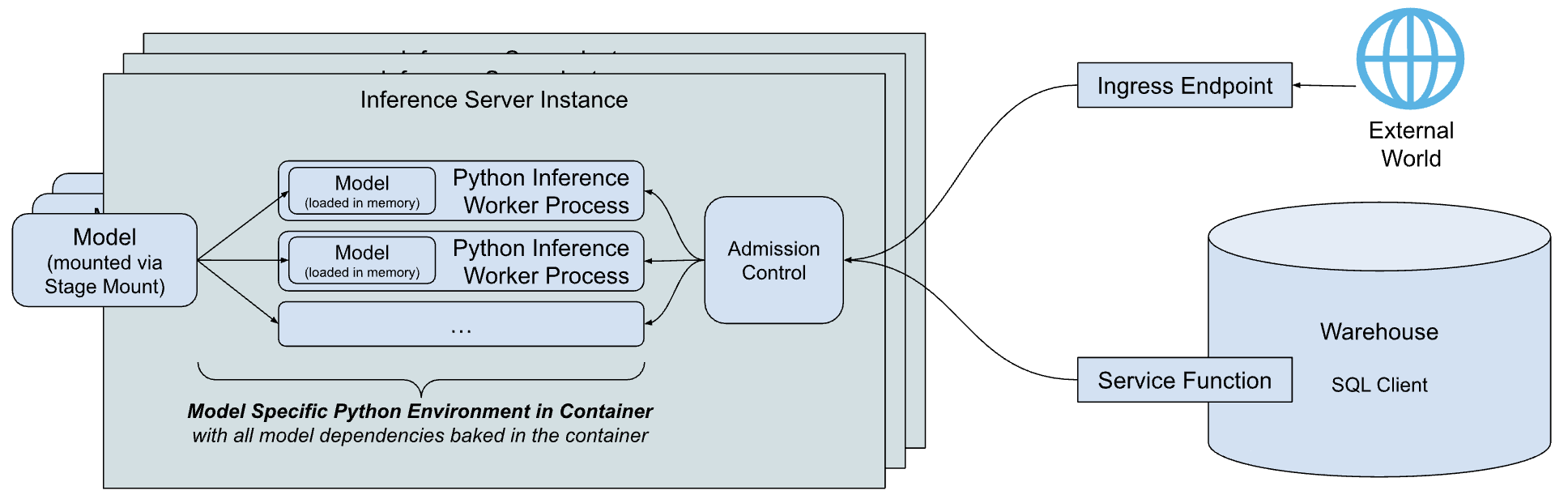

A simplified high-level overview of the Snowflake Model Serving inference architecture is shown below.

The main components of the architecture are:

Inference server: The server that runs the model and serves predictions. The inference server can use multiple inference processes to fully utilize the node’s capabilities. Requests to the model are dispatched by admission control, which manages the incoming request queue to avoid out-of-memory conditions, rejecting clients when the server is overloaded. Today, Snowflake provides a simple and flexible Python-based inference server that can run inference for all types of models. Over time, Snowflake plans to offer inference servers optimized for specific model types.

Model-specific Python environment: To reduce the latency of starting a model, which includes the time required to download dependencies and load the model, Snowflake builds a container that encapsulates the dependencies of the specific model.

Service functions: To talk to the inference server from code running in a warehouse, Snowflake Model Serving builds functions that have the same signature as the model, but which instead call the inference server via the external function protocol.

Ingress endpoint: To allow applications outside Snowflake to call the model, Snowflake Model Serving can provision an optional HTTP endpoint, accessible to the public Internet.

How does it work?¶

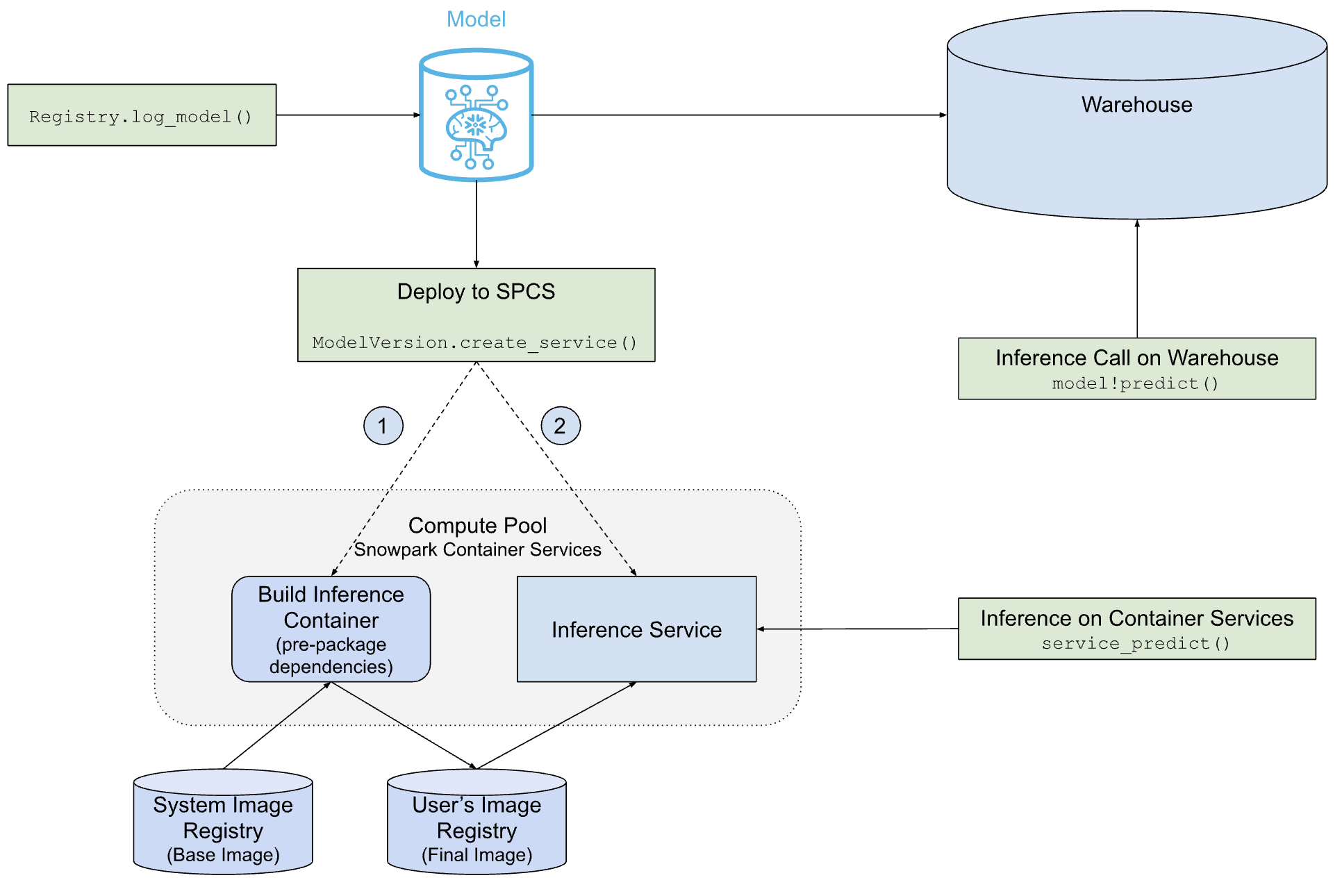

The following diagram shows how the Snowflake Model Serving deploys and serves models in either a warehouse or on SPCS.

As you can see, the path to SPCS deployment is more complex than the path to warehouse deployment, but Snowflake Model Serving does all the work for you, including building the container image that holds the model and its dependencies, and creating the inference server that runs the model.

Prerequisites¶

Before you begin, make sure you have the following:

A Snowflake account in any commercial AWS, Azure or Google Cloud region. Government regions are not supported.

Version 1.6.4 or later of the snowflake-ml-python Python package.

A model you want to run on Snowpark Container Services.

Familiarity with the Snowflake Model Registry.

Familiarity with Snowpark Container Services. In particular, you should understand compute pools, image repositories, and related privileges.

Create a compute pool¶

Snowpark Container Services (SPCS) runs container images in compute pools. If you don’t already have a suitable compute pool, create one as follows:

CREATE COMPUTE POOL IF NOT EXISTS mypool

MIN_NODES = 2

MAX_NODES = 4

INSTANCE_FAMILY = 'CPU_X64_M'

AUTO_RESUME = TRUE;

See the family names table for a list of valid instance families.

Make sure the role that will run the model is the owner of the compute pool or else has the USAGE or OPERATE privilege on the pool.

Required privileges¶

Model Serving runs on top of Snowpark Container Services. To use Model Serving, a user needs the following privileges:

USAGE or OWNERSHIP on a compute pool where the service will run.

If an ingress endpoint is desired for the service, the user must have the BIND SERVICE ENDPOINT privilege on the account.

Model owners or users with the READ privilege on the model can deploy the model to Serving. To enable another role to access inference, service owners can grant the service role INFERENCE_SERVICE_FUNCTION_USAGE to share service functions and grant ALL_ENDPOINTS_USAGE to share ingress endpoints.
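The service-role grants described above might be issued with statements like the ones this sketch builds. It assumes the `GRANT SERVICE ROLE <service>!<role> TO ROLE <role>` form of the GRANT command. The helper name `service_role_grants` and the role name `analyst_role` are hypothetical.

```python
def service_role_grants(service_name, grantee_role,
                        share_functions=True, share_endpoints=False):
    """Build GRANT statements that share an inference service with another role.

    Illustrative helper only; it returns SQL text and does not execute it.
    """
    statements = []
    if share_functions:
        # Lets the grantee call the service functions (for example, from SQL).
        statements.append(
            f"GRANT SERVICE ROLE {service_name}!INFERENCE_SERVICE_FUNCTION_USAGE "
            f"TO ROLE {grantee_role};"
        )
    if share_endpoints:
        # Lets the grantee call the ingress (HTTP) endpoints.
        statements.append(
            f"GRANT SERVICE ROLE {service_name}!ALL_ENDPOINTS_USAGE "
            f"TO ROLE {grantee_role};"
        )
    return statements


for statement in service_role_grants("my_service", "analyst_role", share_endpoints=True):
    print(statement)
```

Each returned statement can then be run by the service owner, for example through a Snowpark session.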

Limitations¶

The following limitations apply to model serving in Snowpark Container Services.

Only the owner of a model or roles with the READ privilege on the model can deploy it to Snowpark Container Services.

Image building fails if it takes more than an hour.

Table functions are not supported. Models that have only table functions cannot currently be deployed to Snowpark Container Services.

Deploying a model to SPCS¶

Either log a new model version (using reg.log_model) or obtain a reference to an existing model version (reg.get_model(...).version(...)).

In either situation, you end up with a reference to a ModelVersion object.

Model dependencies and eligibility¶

A model’s dependencies determine if it can run in a warehouse, in an SPCS service, or both. You can, if necessary, intentionally specify dependencies to make a model ineligible to run in one of these environments.

The Snowflake conda channel is available only in warehouses and is the only source for warehouse dependencies. By default, conda dependencies for SPCS models are obtained from conda-forge.

When you log a model version, the model’s conda dependencies are validated against the Snowflake conda channel. If all the model’s conda dependencies are available there, the model is deemed eligible to run in a warehouse. It may also be eligible to run in an SPCS service if all of its dependencies are available from conda-forge, although this is not checked until you create a service.

Models logged with PyPI dependencies must be run on SPCS. Specifying at least one PyPI dependency is one way to make a model ineligible to run in a warehouse. If your model has only conda dependencies, specify at least one with an explicit channel (even conda-forge), as shown in the following example.

# reg is a snowflake.ml.registry.Registry object

reg.log_model(

model_name="my_model",

version_name="v1",

model=model,

conda_dependencies=["conda-forge::scikit-learn"])

For SPCS-deployed models, conda dependencies, if any, are installed first, then any PyPI dependencies are installed in the conda environment using pip.
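The eligibility rules in this section can be summarized as a small check. This is only a sketch of the rule as described here, not the registry's implementation; the function name `warehouse_eligible_by_spec` is hypothetical.

```python
def warehouse_eligible_by_spec(conda_dependencies=(), pip_requirements=()):
    """Return False when the dependency spec alone rules out warehouse execution.

    Rule sketched from the text: any PyPI requirement, or any conda dependency
    with an explicit channel (written "channel::package"), makes the model
    SPCS-only. A True result is necessary but not sufficient: every package
    must also exist in the Snowflake conda channel, which is not checked here.
    """
    if pip_requirements:
        return False
    return not any("::" in dependency for dependency in conda_dependencies)


print(warehouse_eligible_by_spec(["scikit-learn"]))
print(warehouse_eligible_by_spec(["conda-forge::scikit-learn"]))
print(warehouse_eligible_by_spec(["scikit-learn"], ["sentence-transformers"]))
```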

Create a service¶

To create an SPCS service and deploy the model to it, call the model version’s create_service method, as shown in the following example.

# mv is a snowflake.ml.model.ModelVersion object

mv.create_service(service_name="myservice",

service_compute_pool="my_compute_pool",

ingress_enabled=True,

gpu_requests=None)

The following are the required and most commonly used arguments to create_service:

service_name: The name of the service to create. This name must be unique within the account.

service_compute_pool: The name of the compute pool to use to run the model. The compute pool must already exist.

ingress_enabled: If True, the service is made accessible via an HTTP endpoint. To create the endpoint, the user must have the BIND SERVICE ENDPOINT privilege.

gpu_requests: A string specifying the number of GPUs. For a model that can be run on either CPU or GPU, this argument determines whether the model will be run on the CPU or on the GPUs. If the model is of a known type that can only be run on CPU (for example, scikit-learn models), the image build fails if GPUs are requested.

If you’re deploying a new model, it can take up to 5 minutes to create the service for CPU-powered models and 10 minutes for GPU-powered models. If the compute pool is idle or requires resizing, it might take longer to create the service.

This example shows only the required and most commonly used arguments. See the ModelVersion API reference for a complete list of arguments.

Default service configuration¶

Out of the box, the inference server uses defaults that work for most use cases. Those settings are:

Number of Worker Threads: For a CPU-powered model, the number of worker processes is twice the number of CPUs plus one. GPU-powered models use one worker process. You can override this using the num_workers argument in the create_service call.

Thread Safety: Some models are not thread-safe. Therefore, the service loads a separate copy of the model for each worker process. This can result in resource depletion for large models.

Node Utilization: By default, one inference server instance requests the whole node by requesting all the CPU and memory of the node it runs on. To customize resource allocation per instance, use arguments like cpu_requests, memory_requests, and gpu_requests.

Endpoint: The inference endpoint is named inference and uses port 5000. These cannot be customized.
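As a worked example of the worker default described above, the following sketch computes how many worker processes the server would start. The function name `default_num_workers` is hypothetical and only restates the rule from the text.

```python
def default_num_workers(cpu_count, uses_gpu=False):
    """Default worker-process count: 2 * CPUs + 1 on CPU, 1 on GPU."""
    if cpu_count < 1:
        raise ValueError("cpu_count must be at least 1")
    if uses_gpu:
        return 1
    return 2 * cpu_count + 1


# A CPU-powered model on a node with 6 CPUs gets 13 worker processes,
# so 13 copies of the model are loaded into memory.
print(default_num_workers(6))
print(default_num_workers(6, uses_gpu=True))
```

Because each worker holds its own copy of the model, this number also indicates how much memory the default configuration needs.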

Container image build behavior¶

By default, Snowflake Model Serving builds the container image using the same compute pool that will be used to run the model. This inference compute pool is likely overpowered for this task (for example, GPUs are not used in building container images). In most cases, this won’t have a significant impact on compute costs, but if it is a concern, you can choose a less powerful compute pool for building images by specifying the image_build_compute_pool argument.

create_service is an idempotent function. Calling it multiple times does not trigger image building every time. However, container images may be rebuilt based on updates in the inference service, including fixes for vulnerabilities in dependent packages. When this happens, create_service automatically triggers a rebuild of the image.

Note

Models developed using Snowpark ML modeling classes cannot be deployed to environments that have a GPU. As a workaround, you can extract the native model and deploy that. For example:

# Train a model using Snowpark ML
from snowflake.ml.modeling.xgboost import XGBRegressor
regressor = XGBRegressor(...)
regressor.fit(training_df)

# Extract the native model
xgb_model = regressor.to_xgboost()

# Test the model with pandas dataframe
pandas_test_df = test_df.select(['FEATURE1', 'FEATURE2', ...]).to_pandas()
xgb_model.predict(pandas_test_df)

# Log the model in Snowflake Model Registry
mv = reg.log_model(xgb_model,
    model_name="my_native_xgb_model",
    sample_input_data=pandas_test_df,
    comment='A native XGB model trained from Snowflake Modeling API',
)

# Now we should be able to deploy to a GPU compute pool on SPCS
mv.create_service(
    service_name="my_service_gpu",
    service_compute_pool="my_gpu_pool",
    image_repo="my_repo",
    max_instances=1,
    gpu_requests="1",
)

User interface¶

You can manage deployed models in the Model Registry Snowsight UI. For more information, see Model inference services.

Using a model deployed to SPCS¶

You can call a model’s methods using SQL, Python, or an HTTP endpoint.

SQL¶

Snowflake Model Serving creates service functions when deploying a model to SPCS. These functions serve as a bridge from SQL to the model running in the SPCS compute pool. One service function is created for each method of the model, and they are named like service_name!method_name. For example, if the model has two methods named PREDICT and EXPLAIN and is being deployed to a service named MY_SERVICE, the resulting service functions are MY_SERVICE!PREDICT and MY_SERVICE!EXPLAIN.

Note

Service functions are contained within the service. For this reason, they have only a single point of access control, the service. You can’t have different access control privileges for different functions in a single service.

Calling a model’s service functions in SQL is done using code like the following:

-- See signature of the inference function in SQL.

SHOW FUNCTIONS IN SERVICE MY_SERVICE;

-- Call the inference function in SQL following the same signature (from `arguments` column of the above query)

SELECT MY_SERVICE!PREDICT(feature1, feature2, ...) FROM input_data_table;
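When queries like the one above are generated from code, the naming rule can be applied mechanically. The following is a minimal sketch; the helper names `service_function_name` and `select_statement` are hypothetical, and the column and table names come from the example above.

```python
def service_function_name(service_name, method_name):
    """Service functions are named service_name!method_name."""
    return f"{service_name}!{method_name}"


def select_statement(service_name, method_name, feature_columns, table_name):
    """Build a SELECT that calls a service function once per input row."""
    arguments = ", ".join(feature_columns)
    function = service_function_name(service_name, method_name)
    return f"SELECT {function}({arguments}) FROM {table_name};"


print(select_statement("MY_SERVICE", "PREDICT", ["feature1", "feature2"], "input_data_table"))
```

The argument order must follow the signature reported by SHOW FUNCTIONS IN SERVICE.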

Python¶

Call a service’s methods using the run method of a model version object, including the service_name argument to specify the service where the method will run. For example:

# Get signature of the inference function in Python

# mv: snowflake.ml.model.ModelVersion

mv.show_functions()

# Call the function in Python

service_prediction = mv.run(

test_df,

function_name="predict",

service_name="my_service")

If you do not include the service_name argument, the model runs in a warehouse.

HTTP endpoints¶

Every service comes with its internal DNS name. Deploying a service with ingress_enabled also creates a public HTTP endpoint available outside of Snowflake. Either endpoint can be used to call a service.

Endpoint names¶

You can find the public HTTP endpoint of a service with ingress enabled using the SHOW ENDPOINTS command or the list_services() method of a model version object.

# mv: snowflake.ml.model.ModelVersion

mv.list_services()

The output of both contains an ingress_url column, which has an entry of the format unique-service-id-account-id.snowflakecomputing.app.

This is the publicly available HTTP endpoint for your service. The DNS name limitations apply.

In the URL, underscores (_) in the method name are replaced by dashes (-). For example, the method name predict_probability becomes predict-probability in the URL.

To get the internal DNS name on Snowflake, use the DESCRIBE SERVICE command. The dns_name column of output from this command contains a service’s internal DNS name. To find your service’s port, use the SHOW ENDPOINTS IN SERVICE command. The port or port_range column contains the port used by a service. You can make internal calls to your service through the URL https://dns_name:port/<method_name>.
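The URL rules above can be sketched as a small helper. The function name `endpoint_url` and the internal DNS name in the usage lines are hypothetical. Applying the underscore-to-dash replacement to the internal URL as well is an assumption, since the text only states it for URLs in general.

```python
def endpoint_url(host, method_name, port=None):
    """Build the URL for a model method on a public or internal endpoint.

    host: the ingress_url value (public) or the dns_name value (internal).
    port: only needed for internal calls; taken from SHOW ENDPOINTS IN SERVICE.
    """
    path = method_name.replace("_", "-")  # underscores become dashes in the URL
    authority = host if port is None else f"{host}:{port}"
    return f"https://{authority}/{path}"


# Public endpoint, using the host format shown in the ingress_url column.
print(endpoint_url("random-id.myaccount.snowflakecomputing.app", "predict_probability"))

# Internal endpoint; the DNS name here is a made-up placeholder.
print(endpoint_url("my-service.internal.example", "predict", port=5000))
```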

Request body¶

All inference requests must use the POST HTTP method with a Content-Type: application/json header, and the message body follows the data format described in Remote service input and output data formats.

The first column of your input is an INTEGER number representing the row number in the batch, and the remaining columns must match your service’s signature. The following request body is an example for a model with the signature predict(INTEGER, VARCHAR, TIMESTAMP):

{

"data": [

[0, 10, "Alex", "2014-01-01 16:00:00"],

[1, 20, "Steve", "2015-01-01 16:00:00"],

[2, 30, "Alice", "2016-01-01 16:00:00"],

[3, 40, "Adrian", "2017-01-01 16:00:00"]

]

}
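A request body in this format can be built from plain rows by prepending the row number to each one. The following is a minimal sketch; the helper name `build_request_body` is hypothetical.

```python
import json


def build_request_body(rows):
    """Wrap feature rows in the {"data": [[row_number, *features], ...]} format."""
    return {"data": [[index, *row] for index, row in enumerate(rows)]}


rows = [
    [10, "Alex", "2014-01-01 16:00:00"],
    [20, "Steve", "2015-01-01 16:00:00"],
]
body = build_request_body(rows)
print(json.dumps(body, indent=2))
```

The feature values in each row must appear in the order given by the service function's signature.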

Calling the service¶

Users can call a service using the public endpoint programmatically in the following ways:

With a Programmatic Access Token (PAT), authentication based on a token you generate on Snowflake. For information on setting up a PAT for use with Snowflake services, see Using programmatic access tokens for authentication. To use a PAT when calling a service, include the token in your Authorization request header:

Authorization: Snowflake Token="<your_token>"

For example, with a PAT stored in the environment variable SNOWFLAKE_SERVICE_PAT and the predict(INTEGER, VARCHAR, TIMESTAMP) function signature used in previous examples, you could make a request to the endpoint random-id.myaccount.snowflakecomputing.app/predict with the following curl command:

curl \
  -X POST \
  -H "Content-Type: application/json" \
  -H "Authorization: Snowflake Token=\"$SNOWFLAKE_SERVICE_PAT\"" \
  -d '{
    "data": [
      [0, 10, "Alex", "2014-01-01 16:00:00"],
      [1, 20, "Steve", "2015-01-01 16:00:00"],
      [2, 30, "Alice", "2016-01-01 16:00:00"],
      [3, 40, "Adrian", "2017-01-01 16:00:00"]
    ]
  }' \
  random-id.myaccount.snowflakecomputing.app/predict

With a JSON Web Token (JWT), generated after key-pair authentication. Your application authenticates requests to the public endpoint by generating a JWT from your key pair, exchanging it with Snowflake for an OAuth token, and then using that OAuth token to authenticate with the public endpoint directly.

For an example of using JWT to authenticate with a public endpoint, see Snowpark Container Services Tutorial – Access the public endpoint programmatically.

For more information on key-pair authentication in Snowflake, see Key-pair authentication and key-pair rotation.

Note

There are no side effects when calling the service over HTTP. All authorization failures such as an incorrect token or lack of network route to the service result in a 404 error. The service may return 400 or 429 errors when the request body is invalid or the service is busy.
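A client might map the status codes mentioned in the note above to follow-up actions. This is a sketch only: the function name `classify_status` and the action labels are hypothetical, and reading 400 as "invalid body" and 429 as "service busy" follows the usual HTTP meaning of those codes rather than an explicit statement in this topic.

```python
def classify_status(status_code):
    """Suggest what a client could do after an inference HTTP response."""
    if status_code == 200:
        return "ok"
    if status_code == 429:
        return "retry_later"          # service is busy; back off and retry
    if status_code == 400:
        return "fix_request_body"     # retrying the same body will not help
    if status_code == 404:
        return "check_token_or_network_route"  # authorization failures surface as 404
    return "unexpected"


for code in (200, 400, 404, 429):
    print(code, classify_status(code))
```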

See Deploy a Hugging Face sentence transformer for GPU-powered inference for an example of using a model service HTTP endpoint.

Latency considerations¶

You can use the following options to run inference while hosting a model in Snowpark Container Services. They have different trade-offs with regard to latency expectation and use cases.

HTTP endpoints

HTTP endpoints are best for online or real-time inference and offer the lowest latency of your options. Traffic doesn’t go through a warehouse or global Snowflake services, and input data is directly sent to the server after authentication.

SQL

SQL commands are best for batch inference. Input data is sent to the server from a warehouse, which compiles and executes the query. Response data is then written to a cache in the warehouse before being returned to the client. Warehouse transfers, compilation, and execution incur latency. Execution latency can be lower when a cached result is used.

Snowpark Python APIs

Python APIs are backed by SQL commands executed on Snowflake and best-suited for the same purposes as direct SQL. However, latency is affected by the type of DataFrame used as input. Snowpark DataFrames use data already available in Snowflake and can operate entirely in the same manner as direct SQL. Pandas DataFrames upload their in-memory data to a temporary Snowflake table, which incurs transfer latency.

For the lowest latency when using Python APIs, prepare your pandas DataFrames as Snowpark DataFrames before hosting your model.

Note

Performance evaluations you conduct locally using a pandas DataFrame won’t match performance with the same pandas DataFrame that is running on Snowpark Container Services, due to the latency of temporary table creation. For an accurate performance evaluation of your model, use a Snowpark DataFrame as input.
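One way to follow this advice is to upload the pandas data once and pass the resulting Snowpark DataFrame to run. The following is a minimal sketch that assumes `session` is a Snowpark Session and `model_version` is a ModelVersion object; the wrapper name `run_with_snowpark_input` is hypothetical.

```python
def run_with_snowpark_input(session, model_version, pandas_df, service_name,
                            function_name="predict"):
    """Convert pandas input to a Snowpark DataFrame, then run inference on SPCS."""
    # Upload the in-memory data to Snowflake once, outside any timed section.
    snowpark_df = session.create_dataframe(pandas_df)
    # Inference now reads data that already lives in Snowflake.
    return model_version.run(
        snowpark_df,
        function_name=function_name,
        service_name=service_name,
    )
```

When measuring latency, time only the run call so that the upload cost is not counted as inference time.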

Managing services¶

Snowpark Container Services offers a SQL interface for managing services. You can use the DESCRIBE SERVICE and ALTER SERVICE commands with SPCS services created by Snowflake Model Serving just as you would for managing any other SPCS service. For example, you can:

Change MIN_INSTANCES and other properties of a service

Drop (delete) a service

Share a service to another account

Change ownership of a service (the new owner must have READ access to the model)

Note

If the owner of a service loses access to the underlying model for any reason, the service stops working after a restart. It will continue running until it is restarted.

To ensure reproducibility and debuggability, you cannot change the specification of an existing inference service. You can, however, copy the specification, customize it, and use the customized specification to create your own service to host the model. Be aware that this method does not protect the underlying model from being deleted and does not track lineage. It is best to allow Snowflake Model Serving to create services.

Scaling services¶

Starting with snowflake-ml-python 1.25.0, you can define the scaling boundaries for your inference service by setting min_instances and max_instances within the create_service method.

How Autoscaling Works¶

The service initializes with the number of nodes specified in min_instances and dynamically scales within your defined range based on real-time traffic volume and hardware utilization.

Scale-to-Zero (Auto-Suspend): If min_instances is set to 0 (the default), the service will automatically suspend if no traffic is detected for 30 minutes.

Scaling Latency: Scaling triggers typically activate after one minute of meeting the required condition. Note that total spin-up time includes this trigger period plus the time required to provision and initialize new service instances.

Best Practices: Snowflake recommends the following configuration strategy:

min_instances: Set this to a value that covers your baseline performance requirements for typical workloads. This ensures immediate availability and avoids cold-start delays.

max_instances: Set this to accommodate your peak workload demands while maintaining a ceiling on resource consumption.
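The configuration strategy above can be captured in a helper that validates the two bounds before they are passed to create_service. This is a sketch; the function name `scaling_kwargs` and its validation rules are assumptions, not library behavior.

```python
def scaling_kwargs(baseline_instances, peak_instances):
    """Return min_instances/max_instances keyword arguments for create_service.

    baseline_instances: instances kept running for typical load (0 allows
    the service to auto-suspend when idle).
    peak_instances: upper bound that caps resource consumption.
    """
    if baseline_instances < 0:
        raise ValueError("baseline_instances cannot be negative")
    if peak_instances < max(1, baseline_instances):
        raise ValueError("peak_instances must be >= 1 and >= baseline_instances")
    return {"min_instances": baseline_instances, "max_instances": peak_instances}


print(scaling_kwargs(1, 4))
# Possible use, with mv being a ModelVersion object:
# mv.create_service(service_name="myservice",
#                   service_compute_pool="my_compute_pool",
#                   **scaling_kwargs(1, 4))
```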

Suspending services¶

The default min_instances=0 setting allows the service to auto-suspend after 30 minutes of inactivity. Incoming requests will trigger a resume, with the total delay determined by compute pool availability and the model’s loading time (startup delay).

To manually suspend or resume a service, use the ALTER SERVICE command.

ALTER SERVICE my_service [ SUSPEND | RESUME ];

Deleting models¶

You can manage models and model versions as usual with either the SQL interface or the Python API, with the restriction that a model or model version that is being used by a service (whether running or suspended) cannot be dropped (deleted). To drop a model or model version, drop the service first.

Examples¶

These examples assume you have already created a compute pool, an image repository, and have granted privileges as needed. See Prerequisites for details.

Deploy an XGBoost model for CPU-powered inference¶

The following code illustrates the key steps in deploying an XGBoost model for inference in SPCS, then using the deployed model for inference. A notebook for this example is available.

from snowflake.ml.registry import registry

from snowflake.ml.utils.connection_params import SnowflakeLoginOptions

from snowflake.snowpark import Session

from xgboost import XGBRegressor

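# Create a Snowpark session from a named connection

session = Session.builder.configs(SnowflakeLoginOptions("connection_name")).create()

# Your model training code goes here; its output is a trained xgb_model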

# Open model registry

reg = registry.Registry(session=session, database_name='my_registry_db', schema_name='my_registry_schema')

# Log the model in Snowflake Model Registry

model_ref = reg.log_model(

model_name="my_xgb_forecasting_model",

version_name="v1",

model=xgb_model,

conda_dependencies=["scikit-learn","xgboost"],

sample_input_data=pandas_test_df,

comment="XGBoost model for forecasting customer demand"

)

# Deploy the model to SPCS

model_ref.create_service(

service_name="forecast_model_service",

service_compute_pool="my_cpu_pool",

ingress_enabled=True)

# See all services running a model

model_ref.list_services()

# Run on SPCS

model_ref.run(pandas_test_df, function_name="predict", service_name="forecast_model_service")

# Delete the service

model_ref.delete_service("forecast_model_service")

Since this model has ingress enabled, you can call its public HTTP endpoint. The following example uses a PAT stored in the environment variable PAT_TOKEN to authenticate with a public Snowflake endpoint:

import os

import json

import numpy as np

from pprint import pprint

import requests

def get_headers(pat_token):

    headers = {'Authorization': f'Snowflake Token="{pat_token}"'}

    return headers

headers = get_headers(os.getenv("PAT_TOKEN"))

# Put the endpoint url with method name `predict`

# The endpoint url can be found with `show endpoints in service <service_name>`.

URL = 'https://<random_str>-<organization>-<account>.snowflakecomputing.app/predict'

# Prepare data to be sent

data = {"data": np.column_stack([range(pandas_test_df.shape[0]), pandas_test_df.values]).tolist()}

# Send over HTTP

def send_request(data: dict):

    output = requests.post(URL, json=data, headers=headers)

    assert (output.status_code == 200), f"Failed to get response from the service. Status code: {output.status_code}"

    return output.content

# Test

results = send_request(data=data)

print(json.loads(results))

Deploy a Hugging Face sentence transformer for GPU-powered inference¶

The following code loads and deploys a pretrained Hugging Face sentence transformer, including an HTTP endpoint.

This example requires the sentence-transformers package, a GPU compute pool and an image repository.

from snowflake.ml.registry import registry

from snowflake.ml.utils.connection_params import SnowflakeLoginOptions

from snowflake.snowpark import Session

from sentence_transformers import SentenceTransformer

session = Session.builder.configs(SnowflakeLoginOptions("connection_name")).create()

reg = registry.Registry(session=session, database_name='my_registry_db', schema_name='my_registry_schema')

# Take an example sentence transformer from HF

embed_model = SentenceTransformer('sentence-transformers/all-MiniLM-L6-v2')

# Have some sample input data

input_data = [

"This is the first sentence.",

"Here's another sentence for testing.",

"The quick brown fox jumps over the lazy dog.",

"I love coding and programming.",

"Machine learning is an exciting field.",

"Python is a popular programming language.",

"I enjoy working with data.",

"Deep learning models are powerful.",

"Natural language processing is fascinating.",

"I want to improve my NLP skills.",

]

# Log the model with pip dependencies

pip_model = reg.log_model(

embed_model,

model_name="sentence_transformer_minilm",

version_name="pip",

sample_input_data=input_data, # Needed for determining signature of the model

pip_requirements=["sentence-transformers", "torch", "transformers"], # If you want to run this model in the Warehouse, you can use conda_dependencies instead

)

# Force Snowflake to not try to check warehouse

conda_forge_model = reg.log_model(

embed_model,

model_name="sentence_transformer_minilm",

version_name="conda_forge_force",

sample_input_data=input_data,

# setting any package from conda-forge is sufficient to know that it can't be run in warehouse

conda_dependencies=["sentence-transformers", "conda-forge::pytorch", "transformers"]

)

# Deploy the model to SPCS

pip_model.create_service(

service_name="my_minilm_service",

service_compute_pool="my_gpu_pool", # Using GPU_NV_S - smallest GPU node that can run the model

ingress_enabled=True,

gpu_requests="1", # Model fits in GPU memory; only needed for GPU pool

max_instances=4, # 4 instances were able to run 10M inferences from an XS warehouse

)

# See all services running a model

pip_model.list_services()

# Run on SPCS

pip_model.run(input_data, function_name="encode", service_name="my_minilm_service")

# Delete the service

pip_model.delete_service("my_minilm_service")

In SQL, you can call the service function as follows:

SELECT my_minilm_service!encode('This is a test sentence.');

Similarly, you can call its HTTP endpoint as follows.

import json

from pprint import pprint

import requests

# Put the endpoint url with method name `encode`

URL='https://<random_str>-<account>.snowflakecomputing.app/encode'

# Prepare data to be sent

data = {

'data': []

}

for idx, x in enumerate(input_data):

    data['data'].append([idx, x])

# Send over HTTP

# `headers` is the Authorization header dict built with get_headers() in the previous example

def send_request(data: dict):

    output = requests.post(URL, json=data, headers=headers)

    assert (output.status_code == 200), f"Failed to get response from the service. Status code: {output.status_code}"

    return output.content

# Test

results = send_request(data=data)

pprint(json.loads(results))

Deploy a PyTorch model for GPU-powered inference¶

See this quickstart for an example of training and deploying a PyTorch deep learning recommendation model (DLRM) to SPCS for GPU inference.

Best practices¶

- Sharing the image repository

It is common for multiple users or roles to use the same model. Using a single image repository allows the image to be built once and reused by all users, saving time and expense. All roles that will use the repo need the SERVICE READ, SERVICE WRITE, READ, and WRITE privileges on the repo. Since the image might need to be rebuilt to update dependencies, you should keep the write privileges; don’t revoke them after the image is initially built.

- Choosing node type and number of instances

Use the smallest GPU node where the model fits into memory. Scale by increasing the number of instances, as opposed to increasing num_workers in a larger GPU node. For example, if the model fits in the GPU_NV_S (GPU_NV_SM on Azure) instance type, use gpu_requests=1 and scale up by increasing max_instances rather than using a combination of gpu_requests and num_workers on a larger GPU instance.

- Choosing warehouse size

The larger the warehouse is, the more parallel requests are sent to inference servers. Inference is an expensive operation, so use a smaller warehouse where possible. Using a warehouse size larger than medium does not accelerate query performance and incurs additional cost.

Troubleshooting¶

Monitor SPCS deployments¶

You can monitor deployment by inspecting the services being launched using the following SQL query.

SHOW SERVICES IN COMPUTE POOL my_compute_pool;

Two jobs are launched:

MODEL_BUILD_xxxxx: The final characters of the name are randomized to avoid name conflicts. This job builds the image and ends after the image has been built. If an image already exists, the job is skipped.

The logs are useful for debugging issues such as conflicts in package dependencies. To see the logs from this job, run the SQL below, being sure to use the same final characters:

CALL SYSTEM$GET_SERVICE_LOGS('MODEL_BUILD_xxxxx', 0, 'model-build');

MYSERVICE: The name of the service as specified in the call to create_service. This job is started if the MODEL_BUILD job is successful or skipped. To see the logs from this job, run the SQL below:

CALL SYSTEM$GET_SERVICE_LOGS('MYSERVICE', 0, 'model-inference');

If logs are not available via SYSTEM$GET_SERVICE_LOGS because the build job or service has been deleted, you can check the event table (if enabled) to see the logs:

SELECT RESOURCE_ATTRIBUTES, VALUE
FROM <EVENT_TABLE_NAME>
WHERE true
  AND timestamp > dateadd(day, -1, current_timestamp()) -- choose appropriate timestamp range
  AND RESOURCE_ATTRIBUTES:"snow.database.name" = '<db of the service>'
  AND RESOURCE_ATTRIBUTES:"snow.schema.name" = '<schema of the service>'
  AND RESOURCE_ATTRIBUTES:"snow.service.name" = '<Job or Service name>'
  AND RESOURCE_ATTRIBUTES:"snow.service.container.instance" = '0' -- choose all instances or one particular
  AND RESOURCE_ATTRIBUTES:"snow.service.container.name" != 'snowflake-ingress' -- skip logs from internal sidecar
ORDER BY timestamp ASC;

Package conflicts¶

Two systems dictate the packages installed in the service container: the model itself and the inference server. To minimize conflicts with your model’s dependencies, the inference server requires only the following packages:

gunicorn<24.0.0

starlette<1.0.0

uvicorn-standard<1.0.0

Make sure your model dependencies, along with the above, are resolvable by pip or conda, whichever you use.

If a model has both conda_dependencies and pip_requirements set, these will be installed as follows via conda:

Channels:

conda-forge

nodefaults

Dependencies:

all_conda_packages

- pip:

all_pip_packages
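To make the layout above concrete, the following sketch renders the two dependency lists as text in the style of a conda environment file. The exact file that the image build produces is not shown in this topic, so the format here is an assumption; the function name `render_environment` is hypothetical.

```python
def render_environment(conda_packages, pip_packages):
    """Render dependency lists in conda environment-file style (illustrative)."""
    lines = ["channels:", "- conda-forge", "- nodefaults", "dependencies:"]
    # Conda packages come first, so they are resolved and installed first.
    lines += [f"- {package}" for package in conda_packages]
    if pip_packages:
        # PyPI packages are then installed with pip into the same environment.
        lines.append("- pip:")
        lines += [f"  - {package}" for package in pip_packages]
    return "\n".join(lines)


print(render_environment(["scikit-learn", "xgboost"], ["sentence-transformers"]))
```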

Snowflake gets Anaconda packages from conda-forge when building container images because the Snowflake conda channel is available only in warehouses, and the defaults channel requires users to accept Anaconda terms of use, which isn’t possible during an automated build. To obtain packages from a different channel, such as defaults, specify each package with the channel name, as in defaults::pkg_name.

Note

If you specify both conda_dependencies and pip_requirements, the container image builds successfully even if the two sets of dependencies are not compatible, which might cause the resulting container image not to work as you expect. Snowflake recommends using only conda_dependencies or only pip_requirements, not both.

Service out of memory¶

Some models are not thread-safe, so Snowflake loads a separate copy of the model in memory for each worker process. This can cause out-of-memory conditions for large models when the number of workers is high. Try reducing num_workers.
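Because each worker holds its own copy of the model, memory use grows roughly linearly with num_workers. The following back-of-the-envelope sketch estimates an upper bound for num_workers from the node memory and the in-memory size of one model copy. The function name and the 20% headroom reserved for the server and request buffers are assumptions made for illustration, not Snowflake defaults.

```python
def max_workers_for_memory(
    node_memory_gib: float,
    model_memory_gib: float,
    headroom_fraction: float = 0.2,  # assumed reserve for server and request data
) -> int:
    """Estimate how many model copies fit in memory, with at least one worker."""
    if model_memory_gib <= 0:
        raise ValueError("model_memory_gib must be positive")
    if not 0 <= headroom_fraction < 1:
        raise ValueError("headroom_fraction must be in [0, 1)")
    usable_gib = node_memory_gib * (1.0 - headroom_fraction)
    # One full copy of the model is loaded per worker process.
    return max(1, int(usable_gib // model_memory_gib))


# A 10 GiB model on a node with 32 GiB of memory leaves room for two workers.
suggested_workers = max_workers_for_memory(node_memory_gib=32, model_memory_gib=10)
print(suggested_workers)
```

Treat the result as a starting point for num_workers and adjust it based on the memory usage you observe in the service.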

Unsatisfactory query performance¶

Usually, inference is bottlenecked by the number of instances in the inference service. Try passing a higher value for max_instances when deploying the model.
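A minimal sketch of raising max_instances at deployment time follows. It only assembles the keyword arguments; the helper name, the service name, and the compute pool name are hypothetical, and the keyword names (service_name, service_compute_pool, max_instances, num_workers) are assumed to match the create_service method in your installed version of snowflake-ml-python.

```python
from typing import Any, Dict, Optional


def build_create_service_kwargs(
    service_name: str,
    service_compute_pool: str,
    max_instances: int = 1,
    num_workers: Optional[int] = None,
) -> Dict[str, Any]:
    """Collect deployment settings that affect inference throughput."""
    if max_instances < 1:
        raise ValueError("max_instances must be at least 1")
    kwargs: Dict[str, Any] = {
        "service_name": service_name,
        "service_compute_pool": service_compute_pool,
        "max_instances": max_instances,
    }
    if num_workers is not None:
        kwargs["num_workers"] = num_workers
    return kwargs


kwargs = build_create_service_kwargs(
    service_name="my_inference_service",  # hypothetical name
    service_compute_pool="my_compute_pool",  # hypothetical name
    max_instances=4,
)
# With a model version object `mv` obtained from the registry, the call would be:
#   mv.create_service(**kwargs)
print(kwargs)
```

The compute pool must have enough nodes available for the requested number of instances; otherwise the extra instances cannot start.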

Unable to alter the service spec¶

The specifications of the model build and inference services cannot be changed using ALTER SERVICE. You can change only attributes such as TAG and MIN_INSTANCES. However, because the image is published in the image repository, you can copy the spec, modify it, and create a new service from it, which you can start manually.

Package not found¶

Model deployment fails during the image building phase, and the model-build logs suggest that a requested package was not found. (This step uses conda-forge by default if the package is listed in conda_dependencies.)

Package installation can fail for any of the following reasons:

The package name or version is invalid. Check the spelling and version of the package.

The requested version of the package does not exist in conda-forge. You can try removing the version specification to get the latest version that is available in conda-forge, or use pip_requirements instead. You can browse all available packages in the conda-forge channel.

The package comes from a special channel (for example, pytorch). Add a channel_name:: prefix to the dependency, such as pytorch::torch.

Hugging Face Hub version mismatch¶

A Hugging Face model inference service can fail with the error message:

ImportError: huggingface-hub>=0.30.0,<1.0 is required for a normal functioning of this module, but found huggingface-hub==0.25.2

This is because the transformers package does not declare the correct dependency on huggingface-hub in its package metadata but instead checks the version in its code. To resolve this problem, log the model again, this time explicitly specifying the required version of huggingface-hub in the conda_dependencies or pip_requirements.

Torch not compiled with CUDA enabled¶

The typical cause of this error is that you have specified both conda_dependencies and pip_requirements. As mentioned in the Package conflicts section, conda is the package manager used for building the container image. conda does not resolve packages from conda_dependencies and pip_requirements together, and it gives conda packages precedence. This can lead to a situation where the conda packages are not compatible with the pip packages. For example, you might have specified torch in pip_requirements but not in conda_dependencies. Consider consolidating the dependencies into either conda_dependencies or pip_requirements. If that is not possible, prefer specifying the most important packages in conda_dependencies.

Use a custom image repository for inference images¶

When you deploy a model to Snowpark Container Services, Snowflake Model Serving builds a container image that holds the model and its dependencies. To store this image, you need an image repository. Snowflake provides a default image repository, which is used automatically if you don’t specify a repository when creating the service. To use a different repository, pass its name in the image_repo argument of the create_service method.

To use an image repository that you do not own, make sure the role that will build the container image has the READ, WRITE, SERVICE READ, and SERVICE WRITE privileges on the repository. Grant these privileges as follows:

GRANT READ ON IMAGE REPOSITORY my_inference_images TO ROLE myrole;

GRANT WRITE ON IMAGE REPOSITORY my_inference_images TO ROLE myrole;

GRANT SERVICE READ ON IMAGE REPOSITORY my_inference_images TO ROLE myrole;

GRANT SERVICE WRITE ON IMAGE REPOSITORY my_inference_images TO ROLE myrole;
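If you need to issue the same grants for several roles or repositories, the statements can be generated with a small helper. This is a sketch only: the function name is hypothetical, and the identifier check accepts just simple unquoted names (optionally qualified with database and schema) to avoid building SQL from unexpected input.

```python
import re
from typing import List

# The four privileges listed above for a repository you do not own.
REPO_PRIVILEGES = ("READ", "WRITE", "SERVICE READ", "SERVICE WRITE")

# Simple unquoted identifier, optionally qualified as db.schema.name.
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_$]*(\.[A-Za-z_][A-Za-z0-9_$]*){0,2}")


def image_repo_grants(repository: str, role: str) -> List[str]:
    """Build one GRANT statement per required privilege."""
    for name in (repository, role):
        if _IDENTIFIER.fullmatch(name) is None:
            raise ValueError(f"unsupported identifier: {name!r}")
    return [
        f"GRANT {privilege} ON IMAGE REPOSITORY {repository} TO ROLE {role};"
        for privilege in REPO_PRIVILEGES
    ]


statements = image_repo_grants("my_inference_images", "myrole")
for statement in statements:
    print(statement)
```

Run the generated statements with a role that is allowed to grant privileges on the repository, then pass the repository name as image_repo when creating the service.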