Snowflake Data Clean Room: External data from Google Cloud Platform

Note

Snowflake Data Clean Rooms do not currently support data subject consent management. Customers are responsible for ensuring they have obtained all necessary rights and consents to use the data linked in their clean rooms. Customers must also ensure compliance with all applicable laws and regulations when using Data Clean Rooms, including in connection with third-party connectors.

Data analyzed in a Snowflake Data Clean Room can be native to Snowflake, reside externally in cloud provider storage, or both. A connector allows collaborators to access external data from a cloud provider from within the clean room.

The external data connector uses Snowflake external tables to make data available. Be aware that there is an increased security risk associated with linking external tables in a clean room. As a result, the provider must explicitly allow the use of external tables in the clean room before consumers can use a connector to include external data. If the provider uses the external data connector, the consumer is warned that external tables are being used so they can decide whether to install the clean room.

This topic describes how to use a connector so clean room analysts can access external data from a Google Cloud Platform bucket.

Important

Third-party connectors are not offered by Snowflake and may be subject to additional terms. These integrations are made available for your convenience, but you are responsible for any content sent to or received from the integrations.

Customers are responsible for obtaining any necessary consents in connection with their use of Snowflake Data Clean Rooms. Please ensure that you are complying with applicable laws and regulations when using Snowflake Data Clean Rooms, including in connection with third-party connectors for activation purposes.

Prerequisites

To use the connector for external data:

The provider must explicitly allow the use of external tables in the clean room.

Files must be in Parquet format.
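Before linking a bucket, it can help to confirm that a file really is Parquet. The following is a minimal sketch, not part of the connector: it relies on the fact that an unencrypted Parquet file begins and ends with the 4-byte marker PAR1, and it assumes you have a local copy of the file. The function name looks_like_parquet is hypothetical.

```python
import os
from pathlib import Path

# 4-byte marker found at both ends of an unencrypted Parquet file.
PARQUET_MAGIC = b"PAR1"


def looks_like_parquet(path: str) -> bool:
    """Return True if the file starts and ends with the Parquet magic bytes.

    This is a quick sanity check on a local copy, not a full validation
    of the file's schema or contents.
    """
    data_path = Path(path)
    # Smallest plausible file: header magic + 4-byte footer length + footer magic.
    if data_path.stat().st_size < 12:
        return False
    with data_path.open("rb") as handle:
        header = handle.read(4)
        handle.seek(-4, os.SEEK_END)  # jump to the last 4 bytes
        footer = handle.read(4)
    return header == PARQUET_MAGIC and footer == PARQUET_MAGIC
```

A file that fails this check, such as a CSV export, is not in the format this prerequisite requires.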

Connect to a Google Cloud Platform bucket

Allowing clean room collaborators to access data from Google Cloud Platform (GCP) storage consists of the following steps:

In GCP, obtain the URL of the GCP bucket.

In the clean room environment, create the connector.

In GCP, grant permissions to the connector.

In the clean room environment, authenticate the connector with GCP.

The following sections discuss these steps in more detail.

Obtain the URL of the GCP bucket

The clean room connector needs the URL of the GCP storage bucket in order to access the data. Before creating the connector, you must:

Sign in to the Google Cloud Platform Console as a project editor.

From the Console dashboard, select Cloud Storage » Browser.

Select the bucket that contains the data you want to access from the clean room, and navigate to the location of that data. The bucket cannot be empty.

Select the copy icon to copy the URL of the storage bucket and save it for the next task.

Create the connector and copy the service account identifier

You are now ready to create the connector in the clean room environment. Once you have created the connector, you need to copy details about its service account so it can be associated with the bucket in GCP. To create the connector in your clean room environment:

In the left navigation, select Connectors, then expand the Google Cloud section.

In the Storage bucket URL field, enter the URL that you copied from GCP, then replace https:// with gcs:// in the URL.

Select Create. The clean room generates a service account that it uses to access GCP.

Use the Copy icon to copy the identifier of the service account, and save it for the next task.
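The scheme replacement in the Storage bucket URL step (https:// becomes gcs://) can be sketched as a small helper. This is an illustration only: the function name to_gcs_url and the example URL are hypothetical, and the exact form of the URL you copy from the GCP Console may differ.

```python
def to_gcs_url(console_url: str) -> str:
    """Replace a leading https:// with gcs://, leaving the rest of the URL unchanged."""
    https_prefix = "https://"
    gcs_prefix = "gcs://"
    url = console_url.strip()
    if url.startswith(gcs_prefix):
        # Already in the form the connector field expects.
        return url
    if not url.startswith(https_prefix):
        raise ValueError(f"Expected a URL starting with {https_prefix!r}: {url!r}")
    return gcs_prefix + url[len(https_prefix):]


# Hypothetical example value, not a real bucket.
print(to_gcs_url("https://example-bucket/path/to/data"))
```

Only the scheme changes. The bucket name and path that follow it are passed through as copied.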

Grant permissions to the connector

Clean rooms need permission to access external data in the GCP bucket. Granting these permissions consists of creating a dedicated GCP role for the connector’s service account, then adding the service account as a principal of the GCP bucket.

To create the dedicated GCP role for the connector’s service account:

Sign in to the Google Cloud Platform Console as a project editor.

From the Console dashboard, select IAM & admin » Roles.

Select Create Role.

Enter a name and description for the role.

Select Add Permissions, then add the following permissions:

storage.buckets.get

storage.objects.list

storage.objects.get
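If you prefer to keep the role definition under version control rather than creating it by hand in the Console, the three permissions above can be captured in a role definition document. The sketch below builds one using the field names of the IAM custom role resource (title, description, stage, includedPermissions). The title and description values are hypothetical, and applying the file with your own tooling is outside what this topic covers.

```python
import json

# The permissions listed in this topic for the connector's dedicated role.
CONNECTOR_PERMISSIONS = [
    "storage.buckets.get",
    "storage.objects.list",
    "storage.objects.get",
]


def build_role_definition(title: str, description: str) -> dict:
    """Return a custom role definition containing only the connector permissions."""
    return {
        "title": title,
        "description": description,
        "stage": "GA",
        "includedPermissions": list(CONNECTOR_PERMISSIONS),
    }


if __name__ == "__main__":
    # Hypothetical title and description; choose values that suit your project.
    role = build_role_definition(
        title="Clean Room Connector",
        description="Read-only access for the clean room external data connector",
    )
    print(json.dumps(role, indent=2))
```

Keeping the permission list in one place makes it easier to confirm that the role grants read access only.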

Now that you have created a dedicated role, you are ready to associate the connector’s service account as a principal of the GCP bucket. To associate the service account:

Sign in to the Google Cloud Platform Console as a project editor.

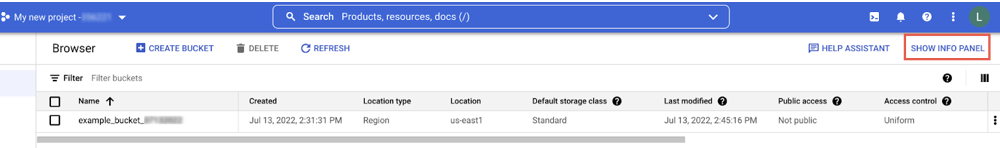

From the Console dashboard, select Cloud Storage » Browser.

Select the bucket that contains the external data.

Select Show Info Panel. The information panel slides open.

Select Add Principals.

In the New Principals text box, paste the service account identifier that you copied from the clean room.

From the Select a role drop-down list, select the dedicated role you created for the service account.
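The Console steps above amount to adding one binding to the bucket's IAM policy: the dedicated role paired with the connector's service account. The sketch below shows the shape of that binding so it can be compared with what the Console displays. It assumes the identifier copied from the clean room is a service account email and that the role is a project-level custom role. The function name, project ID, role ID, and email are all hypothetical.

```python
def build_bucket_binding(project_id: str, role_id: str, service_account_email: str) -> dict:
    """Return an IAM policy binding that pairs a project-level custom role with a service account."""
    return {
        # Project-level custom roles are named projects/<project>/roles/<role id>.
        "role": f"projects/{project_id}/roles/{role_id}",
        # Service account principals carry the serviceAccount: prefix in IAM policies.
        "members": [f"serviceAccount:{service_account_email}"],
    }


# Hypothetical values for illustration only.
binding = build_bucket_binding(
    project_id="my-project",
    role_id="cleanRoomConnector",
    service_account_email="connector@example.iam.gserviceaccount.com",
)
print(binding)
```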

Authenticate the connector

You are now ready to authenticate the connector to make sure it can access the GCP bucket. To authenticate the connector:

In the left navigation of the clean room, select Connectors and expand the Google Cloud section. If you are signed out of the clean room, see Sign in to the clean rooms UI.

Select the GCP bucket you are connecting to, and select Authenticate.

Remove access to external data on GCP

To remove access to a GCP bucket from a clean room environment:

In the left navigation, select Connectors, then expand the Google Cloud section.

Find the GCP bucket that is currently connected, and select the trash can icon.